-0.15 Phase

-0.1 Topology of time

-0.05 Stitching time

0 Emergent properties

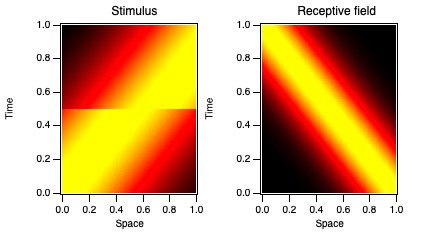

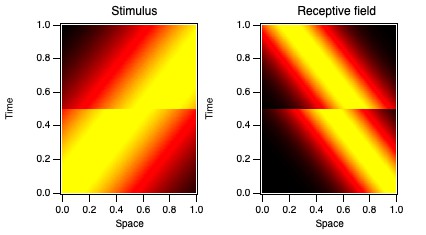

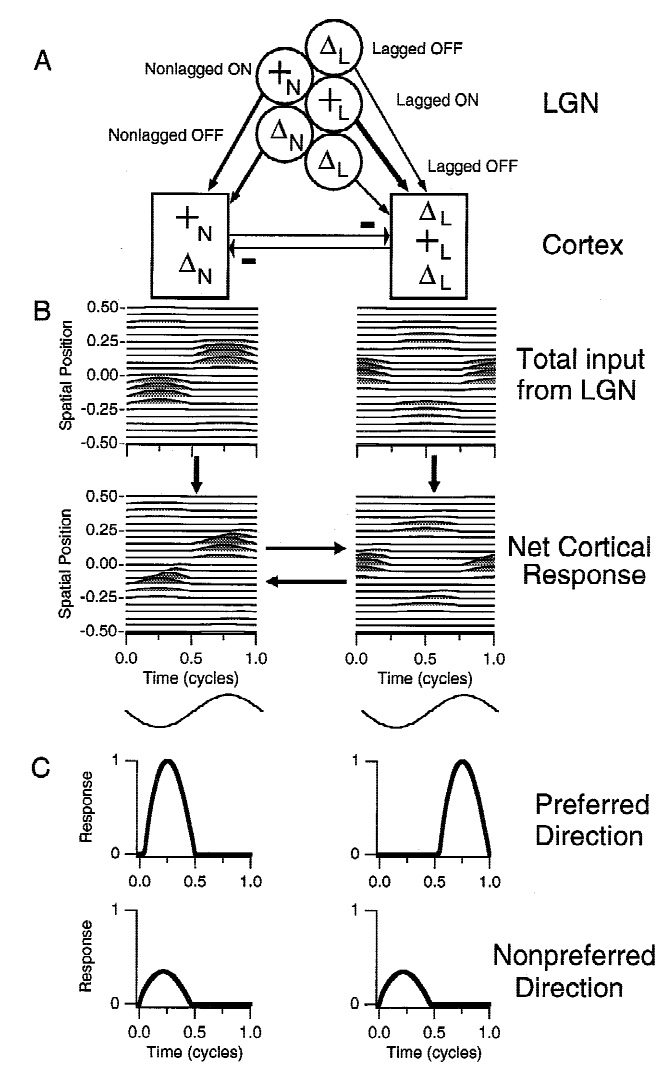

0.05 Direction selectivity

0.1 Adaptation and direction selectivity

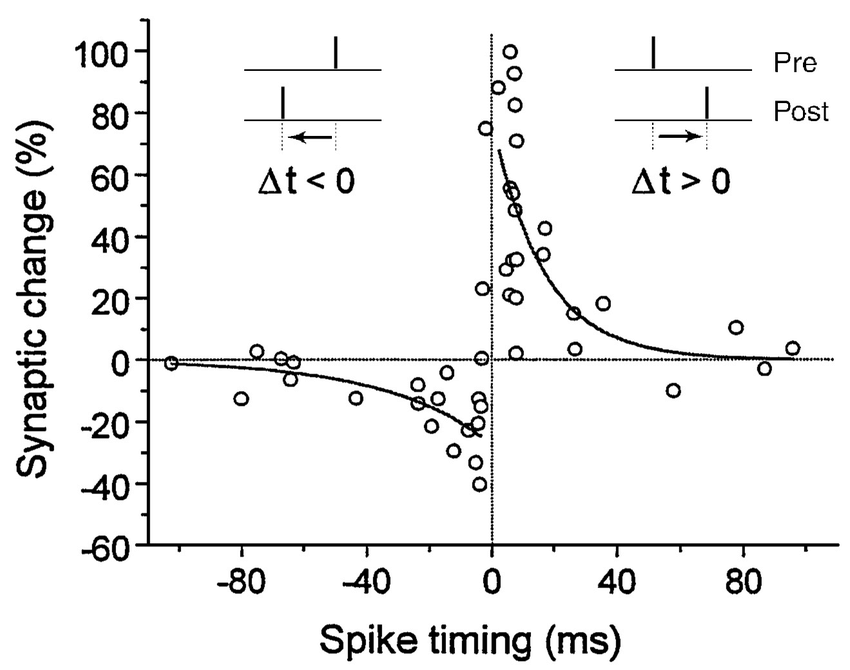

0.15 Learning direction

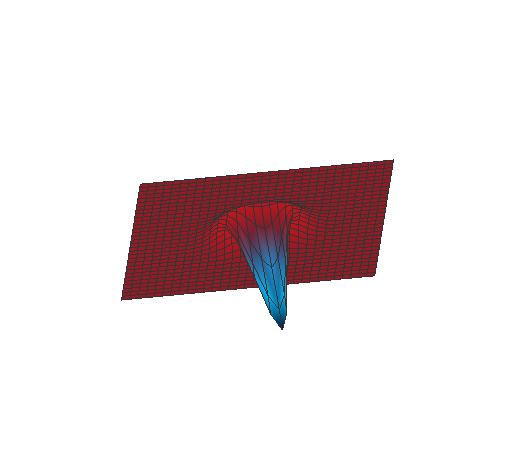

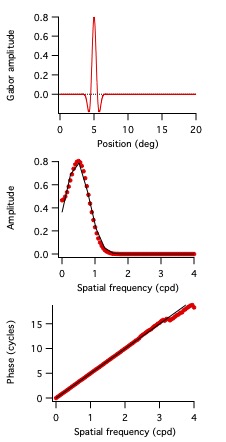

0.2 Receptive fields as sheaves

0.25 Globalization

0.3 Behavior

0.35 Physics and Philosophy

0.4 The direction of time

0.45 Music

0.5 Film

0.55 Arguing

0.6 Appendix

0.7 Afterword

A fascinating group of people living in Amazonia, the Piraha,

typically do not regard the past and future as meaningful.

As anthropologist/linguist Daniel Everett describes them, unless they experienced something for themselves,

or were told about something by somebody else who they know, it doesn't exist.

That probably seems foreign to most readers. We are commonly taught about history,

with a desire to go way back, and even learn about many things that are not likely to be relevant to us, ever.

We frequently consider and imagine future expectations.

Time travel fascinates many people, unlikely though it might be. Our view of time reflects these obsessions.

But to what extent are these acquired and conditioned? Take a few minutes to think about how you view time.

What picture would you draw to illustrate time?

Most of us were probably taught in early childhood that time should be thought of as a line,

projecting back to the past toward the left and projecting forward to the future toward the right.

This model of time dominates thinking in Western science.

Time is considered to be one-dimensional, and totally ordered.

That means that a relation between points in time exists where we can say that for any two points,

one comes before the other (Fig. 1). That sounds natural to most people, but is subject to question.

The best-known such question is which came first, the chicken or the egg? Similarly, which comes first, noon or midnight?

Such counterexamples illustrate that there might be a problem with this conventional notion of time as totally ordered points on a line.

The problem is that points in time do not correspond to when things occur.1

Conventional views of time

Timeline, moments, simultaneity, reality of time, alternative views

Despite this idea that time is a sort of track that we ride on in a single direction, we can't directly observe where we are on that rail.

Einstein showed that this view of time as points on a line doesn't make physical sense.

He detailed how, in order to measure two events as having happened at the same time,

observations of the events are needed, and these observations take time because of the finite speed of light

(the highest speed that can be reached, see Physics and Philosophy below).

If two stars at opposite ends of our galaxy went supernova,

they might appear to explode simultaneously to your friend Stella equidistant from them,

even though the explosions will appear to occur at different times to you, out at a point nearer to one of the stars (Movie 1).

Which came first, α or β? So time depends on space (and other factors).

We are accustomed to the lack of simultaneity of our clocks located at different places on the earth.

We have conventionally designated 24 (plus some odd ones) time zones,

with the clock time increasing by one hour

(with exceptions like Newfoundland)

when crossing into a new zone from west to east.

We even deal with the fact that this arrangement increments the date at the International Date Line

when going east to west.

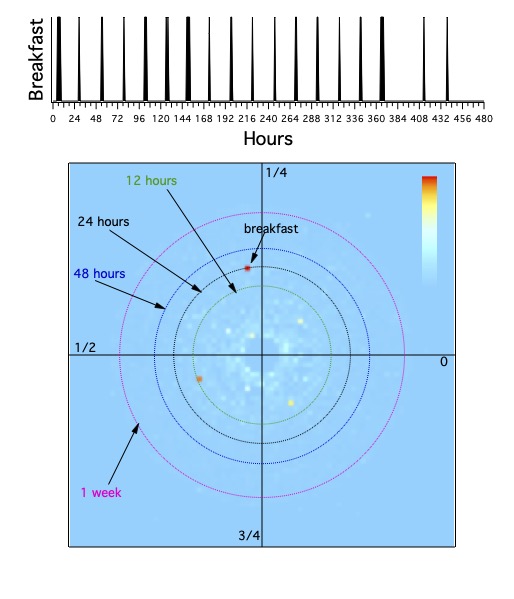

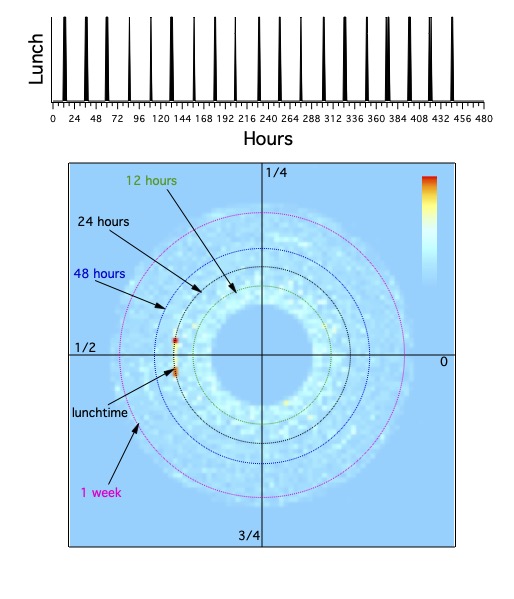

The reason for this lack of apparent simultaneity across the globe is that we like to have clock times

correspond with the rotation of the earth. This makes sunrise and breakfast occur around 6 am, high noon and lunch around 12,

and sunset and dinner around 6 pm. People in different time zones do not eat their meals simultaneously.

Note that those correspondences of sunrise and sunset are only true at a set of disjoint points

on the equator and on the vernal and autumnal equinoxes. More importantly, the correspondence varies with latitude.

The clock times of sunrise and sunset vary with the seasons.

Even the seasons are reversed between the north and south over the period of a year.

And more tropical regions don't have the seasons those of us in the north and south experience: wet and dry seasons are typical.

We are less accustomed to the fact that a clock sitting on a mantel runs faster than an identical clock on the floor.

The difference is quite small, but we have clocks that are precise enough to measure it. As Einstein also showed, time is affected by gravity.

In general relativity, gravity is the curvature of spacetime, so the effect of gravity on time can be seen as a warping of spacetime.

All of these phenomena become clearer when working in the frequency domain, as discussed throughout this treatise.

In the frequency domain, we rely on frequency and phase. Sometimes period, the reciprocal of frequency, substitutes.

The daily (period of 24 hours) rotation of the earth gives us time zones (phases),

and the annual (period of a year) orbit of the tilted earth around the sun produces seasons (phases).

We understand that spatial phase varies continuously around the lines of latitude,

and temporal phase is equal to that at the period of a day.

Greenwich England's longitude (0°) and New Orleans USA's longitude (90°) are separated by ¼ of the way around the earth,

meaning they are 6 hours apart, ¼ of the day (during standard time).

Gaborone Botswana and Tallinn Estonia are at about the same longitude, but differ in latitude by about 84°,

making them about ¼ cycle apart on a south-north-south great circle.

Sunrise that occurs at 6:48 am in Gaborone occurs at 5:23 am in Tallinn on August 9.

We can easily calculate these astronomic predictions in the frequency domain, based on phases and periods.
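The longitude arithmetic can be sketched in a few lines. This is only a minimal illustration, assuming idealized solar time (no political time zone boundaries, no daylight saving); the function names `longitude_phase` and `hours_apart` are hypothetical, and west longitudes are written as negative numbers.

```python
def longitude_phase(lon_deg_a: float, lon_deg_b: float) -> float:
    """Phase difference between two longitudes, as a fraction of a cycle (0..1)."""
    return ((lon_deg_a - lon_deg_b) % 360.0) / 360.0


def hours_apart(lon_deg_a: float, lon_deg_b: float, period_hours: float = 24.0) -> float:
    """Convert the spatial phase difference into clock time at a one-day period."""
    return longitude_phase(lon_deg_a, lon_deg_b) * period_hours


# Greenwich (0 degrees) and New Orleans (90 degrees west, written as -90).
print(longitude_phase(0.0, -90.0))  # 0.25 of a cycle
print(hours_apart(0.0, -90.0))      # 6.0 hours
```

The same phase value could be read against any other period; only the final multiplication changes.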

Analogous practical and theoretical gains are obtained in neuroscience.

We only know about time because our brains have neurons whose activity changes with time.2

Just as Einstein recognized that an observer is needed to know about timing,

we need to recognize that our brains need to respond to changes in the world with changes in activity

to know about timing. Like the finite speed of light,

neurons only respond to changes in the world after some finite time, usually thought of as latency.

Our brains don't know when an event occurs; they only have the information from their own activity,

and that activity does not change simultaneously with the event.

The time when an event occurs can only be known relative to when another event occurs.

Philosophers have long argued about the reality of time. One of my childhood heroes,

Parmenides3,

considered time to be an illusion: "coming into being is extinguished and perishing is unheard of."

Although this concept is fairly common in philosophy (e.g., St. Augustine,

Avicenna,

Bergson,

and Russell),

most of Western science takes time to have some reality.

In physics, time is often combined with space into one structure that is influenced by matter, although interpretations can vary.

Einstein, and many other physicists, agree(d) with Parmenides that time is an illusion (perhaps).

A physical description of the universe has been proposed, the Wheeler-DeWitt equation, in which time does not exist.

Outsider physicist Julian Barbour (The Nature of Time) shows how time is an abstraction.4

He quotes his touchstone Ernst Mach: "It is utterly beyond our power to measure the changes of things by time ...

time is an abstraction at which we arrive by means of the changes of things;

made because we are not restricted to any one definite measure, all being interconnected."

Barbour himself echoes Parmenides: "The [theory] I favour seems initially impossible:

the quantum universe is static. Nothing happens; there is being but no becoming. The flow of time and motion are illusions."

Barbour proposes a lovely metaphor: "Unlike the Emperor dressed in nothing, time is nothing dressed in clothes.

I can only describe the clothes."

When we supposedly measure time, we are using one process that depends on time to measure another process that depends on time.

Galileo supposedly measured his pulse rate by counting how many oscillations a chandelier made.

Rovelli chapter 7 provides an excellent discussion of this, rephrasing Barbour's quote above:

"'Physics without time' is physics where we speak only of the pulse and the chandelier, without mentioning time."

A runner's pace is measured with a stopwatch that counts ticks of some kind produced by the pendulum-like mechanisms inside the watch.

Mach recognized that time doesn't have any yardstick of its own.

Carlo Rovelli ends the first section of his book The Order of Time with these thoughts:

Werner Heisenberg established famed limits on the precision to which time can be measured.

The Planck time (on the order of 10⁻⁴³ seconds - unimaginably precise, practically speaking;

the Planck length is about 10⁻³³ centimeters) can be considered as the smallest period of time.

Points on the time line are called moments, and receive a lot of attention. Often, the most important moment is thought of as "now", quite an elusive concept (Muller; note his discussion of free will at about 50:40).

Experiences are associated with moments in time. Those experiences have some duration, however,

so we should be leery of moments as single points in time. Whether or not two moments can overlap in time seems unclear.

"There is no nature apart from transition, and there is no transition apart from temporal duration.

This is why an instant of time, conceived as a primary simple fact, is nonsense" (Whitehead 1938, p. 207).

Most neuroscientists might argue that our experiences do occur at moments in time, and can be thought of as events,

with brief durations. These events are associated with activity in neurons, and the time between an event and the neuronal activity,

the latency of the response, is often considered the basis for our sense of time.

To repeat for emphasis, people think this even though the only knowledge we have is when the activity occurred relative to other neural activity -

we don't directly know when the event occurred. Furthermore, our experience is seldom localized in time to the degree expected from that view.

When a very brief experience occurs, in order to be detectable it needs to be strong.

We don't experience weak, subtle things that are only present for a few milliseconds, especially considering that our experiences do not occur in isolation,

but instead over a complicated background that can be considered noise in the context of detecting a particular feature.

Consider that much of our experience consists of feelings, and whether it's even possible to experience an emotion for only milliseconds.

More importantly, most neuroscientists, and most people in general, think of time in terms of conscious processes.

Almost all of what the brain does does not enter our consciousness.

We think about time often enough. For example, when we're late we think about having to go faster.

But when we hear somebody talking, we normally don't think about how the sounds change over time.

When we watch leaves rustling in the wind, or waves at the beach, we don't normally pay attention to the timing of their motion.

When we walk, we don't normally worry about how we're moving our feet. Everything we do, we do over time.

We are unaware of most everything we do.

Throughout this book, it might help to keep in mind that I am primarily addressing unconscious processes.

The neurons I describe reside mostly in parts of the brain that only indirectly participate in conscious perception.

But they all depend on temporal processing to perform their functions.

Neglect of these billions of neurons has led to experiments and theories that do not capture how the brain processes time.

Daniel Everett argued that the Piraha language lacks words that correspond to concepts that other people take for granted,

and because those words don't exist, the concepts don't exist. They do not use words for colors or numbers, for example,

and do not perceive the world in those terms. On the other hand, they have a rich language in which they discuss their lives in the forest.

Our language for discussing time is impoverished by our conditioned concepts of one-dimensional time.

I discuss throughout this book how to enrich our concepts.

Buzsaki and Tingley argued that neuroscience does not require the concepts of space and time

to make sense of how the brain (at least the part of the brain they considered, the hippocampus and related structures)

performs its computations. Unfortunately, they emphasize that time and space are not represented by the brain.

I would question whether anything is represented in the brain. Instead, I regard brain function in terms of causality.

Neuronal activity causes perceptions, emotions and behavior. And time.

The brain is most often modeled as a hierarchy of areas. The simple notion that most neuroscientists rely on

is that an event in the world causes changes in neuronal activity in one brain area, after a brief latency.

This activity is then conducted to other areas in the brain with an additional delay, and then on to further areas

moving up a hierarchy, with delays between each stage. For this simple notion,

the hierarchy could be defined by the latency of the response in each area, shorter at the bottom of the hierarchy

and increasing proceeding up the hierarchy.

Lots of things are wrong with this hierarchical idea. First, as a general complaint, the concept of a hierarchy

probably occurs to people because we are conditioned to think in these terms.

Military, academic, business, and numerous other social systems are organized hierarchically.

We impose our conditioned concepts on other systems like the brain. This view is contrary to the conventional idea

that hierarchies are essential to the way we think.

Second, brain activity is poorly localized in time. That is, action potentials (also known as spikes5),

the main form of neuronal activity, do not just occur at a single moment, with the neuron silent at all other times.

If you hear a sudden sound, many neurons will suddenly change their activity right after the sound occurs.

But when you hear somebody talking to you, neurons are continually activated, firing action potentials at different rates over time.

The simple notion assumes wrongly that neurons are silent until a stimulus comes along.

My students were taught this lesson by the following exam question.6 You are invited to Dubai to give a talk.

At the end of the day of your presentation, you go back to your hotel, and ascend to your room on the 70th floor.

You are exhausted and get into the shower. As soon as you turn it on, you have hot water. As you relax, you ponder how it is that the hot water comes on so quickly.

The boilers in the basement almost 300 meters below heat the water, and it has to take time to pump that water all the way up.

How is this done?

The lesson is that the water is continuously circulated. Opening a tap simply lets the nearby hot water flow through the local outlet.

The brain similarly circulates activity continuously. Neurons are active much of the time, and what counts is how that activity changes over time.

When something happens, activity increases or decreases, and those changes in signaling drive behaviors.

This makes it hard to measure latency, and to use it for figuring out how the brain works.

We have a geothermal system at home that consists of four 180 foot deep holes,

from which fluid is circulated through a heat pump to cool the house in the summer and heat it in winter.

The pump does not need to work so hard to move the water up the 180 feet, since it circulates with the water that drops 180 feet.

A similar system moves inclines up and down hills (Duquesne Incline).

Another analogy is how electricity flows through wires. Electrons do not travel far; they move somewhat randomly (it's actually

more interesting than that: Quantum effects),

but the very large bulk of electrons shifts in one direction (a bit like Movie 12). In neurons, ions don't so much flow through channels in the membrane,

but, like the electrons, just move microscopic distances to carry the electric current through their distributions on each side of the membrane.

Our intuition is often not helpful at very small or large scales. Like brain activity, charges are poorly localized.

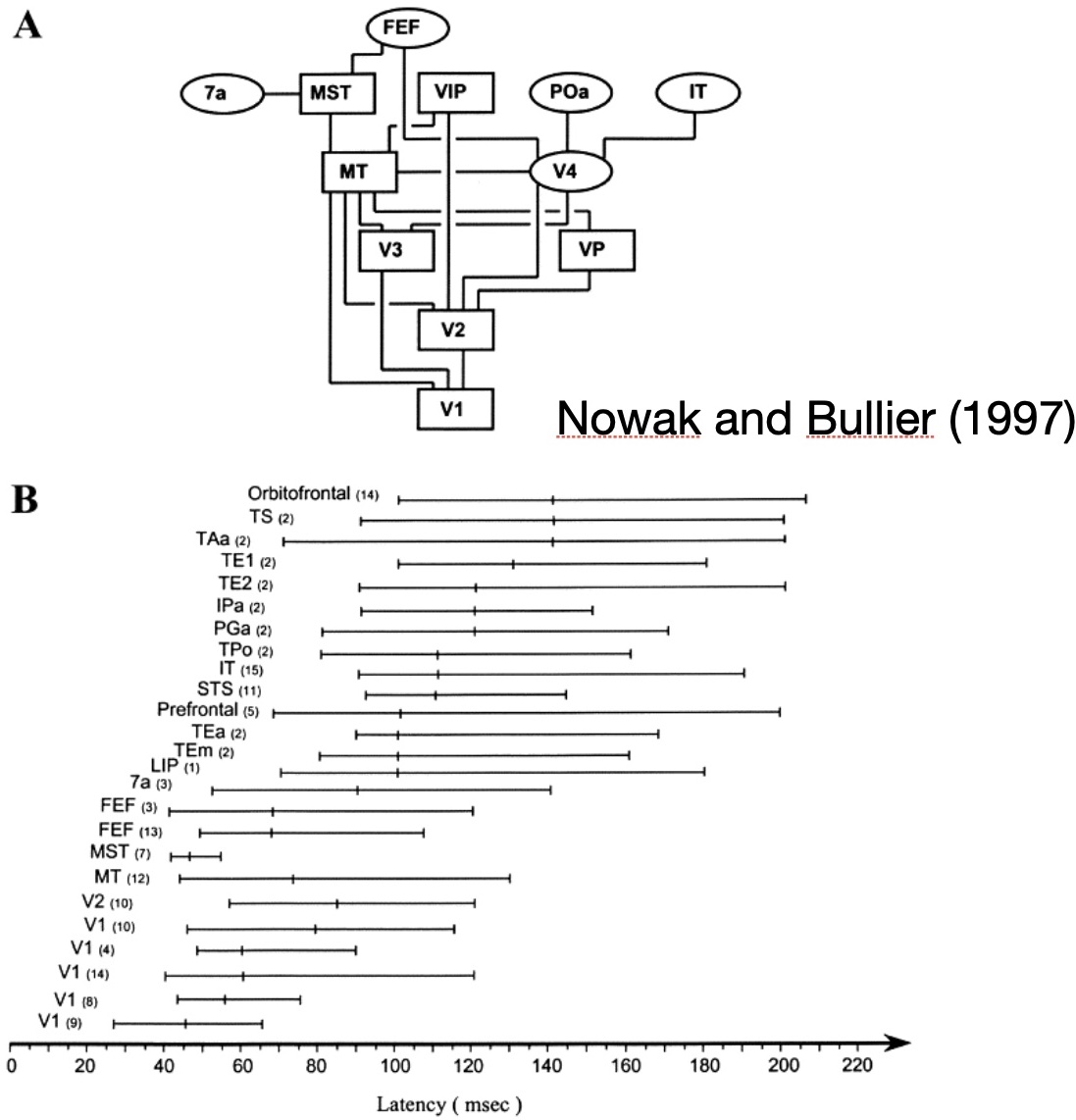

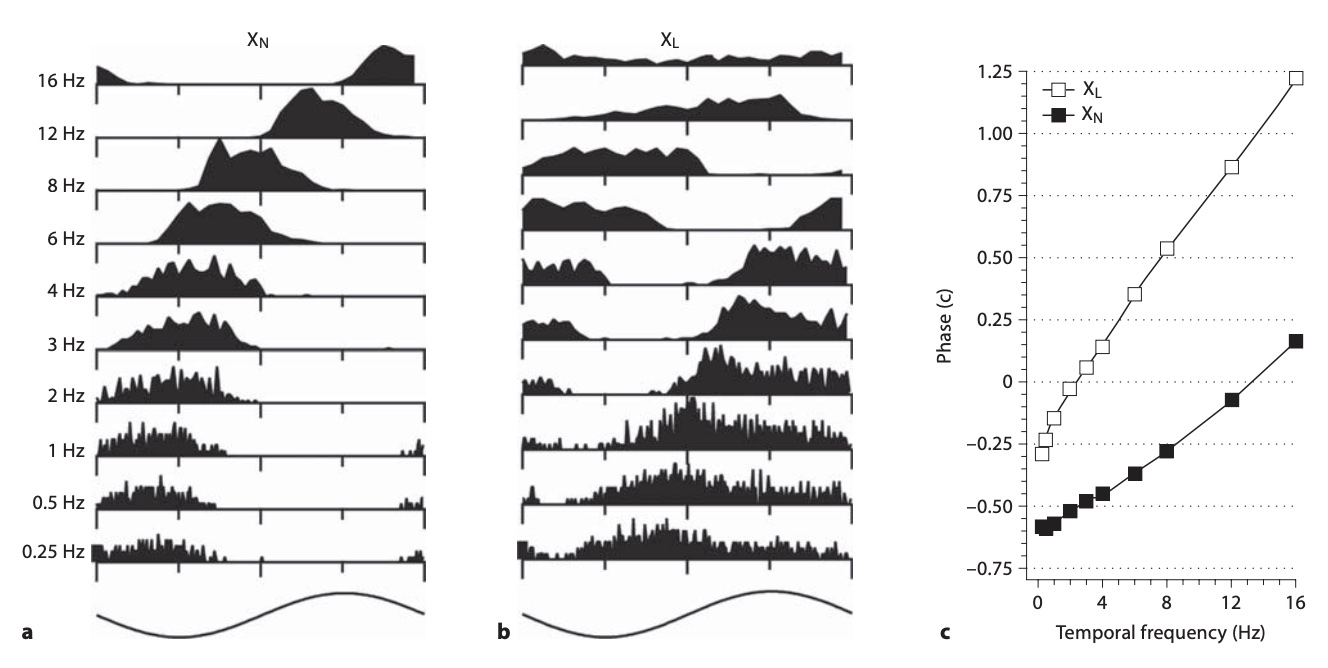

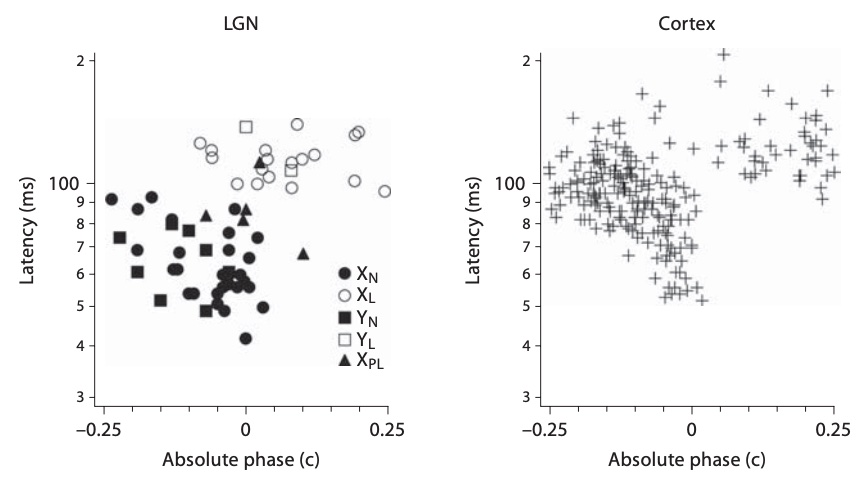

Third, when activity in different parts of the brain is actually measured and latencies are determined,

each area has neurons that respond over a wide range of latencies

(Fig. 2; this is Figure 4 in Nowak and Bullier 1997, which provides far more depth).

These latency ranges overlap across different areas. In other words, all of the areas are firing action potentials at the same time.

Activity does not just propagate up a hierarchy. Nowak and Bullier conclude "Thus, if one assumes that latencies to visual stimuli provide a reasonable estimate

of the order of activation of cortical areas, it appears that the order does not follow the one suggested by the anatomical hierarchy of cortical areas."

We will see that there are properties of the brain that are consistent with a hierarchy,

but we need to be careful when modeling the brain. In particular, latency does not provide a useful way to determine a hierarchy.

We will consider whether duration maps into a hierarchy from the back toward the front of the brain.

To get to the heart of how to actually think of time, we measure time based on two dimensions (using Barbour's metaphor, time is dressed in two dimensions):

a series of values ("phases") telling where we are in each of a set of arbitrary periods (e.g., the year, the month, the week, the day, the hour, the minute, the time it takes to get dressed, ...).

Phase and period (or its reciprocal, frequency) are the two dimensions.

Most of the world (we argue that everybody, even though they don't recognize it) uses the two-dimensional view of time. The Mayan calendar (Fig. 3) is one well-known example.

It consists of a large set of periods, with names for the days and months giving the phases.

Despite most people not being explicitly aware of it, we almost always use two-dimensional representations of time.

Our clocks tell us at least two sets of periods and phases, as do our calendars.

The conventional view of time does consider more than latency. Often, duration is measured.

An example is to estimate how long an audible tone is present, as when a musician plays a rhythm with sustained notes of different durations.

We are frequently conscious of durations, growing impatient when something takes longer than we expect, for instance.

Barbour focuses on duration. Although this is an important subject that we will touch on below (the period/frequency dimension becomes important),

our focus will be on timing. To be definite, timing involves when things happen, relative to when other things happen, and is measured mostly by the phase dimension.

Time only exists because we detect changes in the world, because of changes in our brain activity.

These changes can be regarded as generalized motion, the change of some set of neuronal activations, or, in physical terms, changes in the state of the universe.

We will return to the importance of thinking about time in terms of motion.

Motion has two components, speeds and directions. Direction will play the larger role.

The thesis here is:

This treatise will probably appear to be deadly serious. So remember to get your laughs wherever they might be.

Listening to this might help.

Footnotes

1 An obvious resolution to this problem is that a series of events occurred over a long time that resulted in the evolution of birds that laid eggs. And that noon and midnight are series of points on the line.

The point here, as detailed below, is that we often regard noon and midnight as singular events:

the many noons and the many midnights can each represent one thing to us.

Much of what we experience is repeated in a similar fashion over time, and we think of those repeated activities as sharing a single time course.↩

2 Circular reasoning, but the point is that time equals changes in neuronal activity.

This is an issue that is present throughout this book, that I don't reject the word "time" even though I reject a simple interpretation of its referent.

In Philosophy of Language, the usual example is Russell's "The Present King of France."

We understand "time" semantically even though we don't understand what it refers to.↩

3 Parmenides was a 5th century BCE Greek philosopher who lived in Elea, on what is now the Italian coast.

His work is known to us only by a set of fragments written in Epic Hexameter, the style of Homer.

Parmenides' philosophy of Monism, that everything is one, challenged thinkers to falsify his propositions, as he and his pupil Zeno tried to falsify the Milesians' ideas about change.

He got a lot right, including continuity. His monism could be interpreted in terms of things like the "laws of physics" being eternal.↩

4 Abstractions can be regarded as not having existence. Nominalism is a philosophy that rejects abstractions, so that red things exist, but red does not.

Nominalism is discussed further below.

In my second year at Caltech, I was exposed to Linguistics. The professor for this course, Bozena Henisz Dostert Thompson,

spent the first term in her home country of Poland, and her newlywed husband Fred Thompson took over the teaching.

Thompson had diverse training and interests, having studied with logician Alfred Tarski,

and worked in computer science. He developed natural language abilities in computers,

which at the time were not really capable compared to what we have now.

He taught us about Chomsky's theories of grammar,

and I did an independent study with him that I wanted to be about Structuralism,

but he made it primarily a deep dive into Nominalism (Nelson Goodman and Willard Van Orman Quine).

I was slow to appreciate the good parts of Nominalism, but am now committed to avoiding categorization of people.↩

5 One of many beautiful phenomena in the brain, that these types of remarkably complex signals evolved,

with so many accompanying specializations.↩

6 Thanks to Ray and Tom Magliozzi, the car guys.↩

7 Up the hierarchy is defined by projections from a lower area to the middle cortical layer (4) in the upper area.

Projections down the hierarchy go from layer 5 to layer 2/3.↩

When we say what time it is, we provide a series of phase values for several periods.

If it is 10:37 am on Tuesday 10 May 2050,

we mean that it is late morning, early in the week, about a third of the way through the month, a bit more than a third of the way through the year, and midway through the century.

These are pairs of values, a phase and a period, for the periods of a day, a week, a month, a year, and a century.
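A minimal sketch of turning a clock-and-calendar reading into that series of phase values, using Python's standard library. The function name `phases` is hypothetical, and two conventions are assumptions of mine rather than anything fixed: the week is taken to start on Monday, and the century to run from year xx00 through xx99.

```python
import calendar
from datetime import datetime


def phases(moment: datetime) -> dict:
    """Phase of `moment` (fraction of a cycle, 0..1) within several periods."""
    day = (moment.hour * 3600 + moment.minute * 60 + moment.second) / 86400
    # Assumption: weeks start on Monday (weekday() == 0).
    week = (moment.weekday() + day) / 7
    days_in_month = calendar.monthrange(moment.year, moment.month)[1]
    month = (moment.day - 1 + day) / days_in_month
    days_in_year = 366 if calendar.isleap(moment.year) else 365
    year = (moment.timetuple().tm_yday - 1 + day) / days_in_year
    # Assumption: centuries run from xx00 through xx99.
    century = (moment.year % 100 + year) / 100
    return {"day": day, "week": week, "month": month, "year": year, "century": century}


example = phases(datetime(2050, 5, 10, 10, 37))
for period, phase in example.items():
    print(f"{period:8s} {phase:.3f} cycle")
```

Each entry is a (period, phase) pair; choosing a different start for the week or the century shifts a phase by a constant but does not change the idea.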

Phase refers to how far around a cycle things lie. We are interested in the set of phase values over a range of periods.

Phase is a property of continuous processes. For discrete situations, in which items can be counted, we learn about doing division,

where remainders are left after dividing one integer by another. For division by 4, the remainders can be 0, 1, 2, or 3.

These remainders are the discrete version of phase. On a clock with 12 hours,

we measure phase over a 12-hour period by saying what hour we are closest to.

On a calendar page, we measure phase by what day it is over a period of a month, for which the period ranges between 28 and 31 days.

The periods we use are somewhat arbitrary, though sometimes derived from some natural phenomena

(the origins of our western units of time go back primarily to the ancient Near East,

developed with the recognition that 12 and 60 have many divisors).

But of course we reckon time in terms of months and years that actually have varying periods.

We might receive paychecks monthly and keep track of payday as a given phase, even though the distance between paydays varies on the timeline.

We rely on the day of the week to organize our lives, even though weeks don't clearly correspond to natural phenomena

(the number of days in a week has varied across cultures). Seconds, minutes, and hours are relatively artificial periods as well

(derived from dividing larger units by factors of 360, i.e. 1, 2, 3, 4, 5, 6, 8, 9, 10, 12, 15, 18, 20, 24, 30, 36, 40, 45, 60, 72, 90, 120, 180, 360).

Ptolemy divided the circle into first and second minutes using successive divisions by 60, giving us what we now term minutes and seconds.

The circle was often divided into 360 degrees, a system that persists for measuring phase.

Phase is also measured relative to 2 times π, as radians, so that halfway around the circle is π and a quarter of the way around is π/2.

I choose to measure phase as the fraction of the way around the circle, so halfway around is ½ and a quarter of the way around is ¼.

The unit of phase is a cycle, so a half cycle and a quarter cycle are sensible quantities.
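The three conventions differ only by a scale factor, as this small sketch shows (the function names are hypothetical):

```python
import math


def cycles_from_degrees(degrees: float) -> float:
    """360 degrees make one cycle."""
    return degrees / 360.0


def cycles_from_radians(radians: float) -> float:
    """2*pi radians make one cycle."""
    return radians / (2.0 * math.pi)


print(cycles_from_degrees(180.0))        # halfway around: 0.5 cycle
print(cycles_from_radians(math.pi / 2))  # a quarter of the way around: 0.25 cycle
```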

Remarkably, many people are oblivious to the fact that we divide time into these different periods and the periods into different phases.

One of many examples is the meme that attributes a criticism of the use of Daylight Saving Time to various Native Americans (Fig. 4):

"Only the government would believe that you could cut a foot off the top of a blanket, sew it to the bottom, and have a longer blanket."

The notion that the day resembles the progression from the top to the bottom of a blanket, and, worse,

that days don't change their behaviors over the course of a year with different seasons,

seems more likely to arise from a modern urbanite in less touch with nature than at least the stereotype of Native Americans.

For most of human history, the earth's rotation was the primary source for measuring time, based on the movement of the sun across the sky during daylight,

and the stars at night. Dividing daylight into 12 hours and the dark phase of the day into another 12 hours requires those hours to vary across space and time,

because these periods change with the seasons and latitude. However, for most purposes, these approximate phases of the day worked well and were useful.

Daylight saving time resets phase in a relatively crude way by jumping by one hour twice a year. We could instead just shift by about 20 seconds every day,

or smaller amounts every hour (about 1 second) or minute (1/60 of a second) or second (1/3600 of a second).

Those adjustments would have their own problems, of course,

because of our technological world that requires lots of synchronization, but might be managed by that technology as well.

We would not be aware of these minuscule resettings of phase.
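The arithmetic behind those step sizes can be checked with a rough sketch. It assumes, as a simplification, that the two clock changes sit exactly half a year apart (actual schedules differ by country); the constant names are hypothetical.

```python
SECONDS_PER_HOUR = 3600
# Assumption: the one-hour shift is spread evenly over half a year.
DAYS_BETWEEN_CHANGES = 365.25 / 2

per_day = SECONDS_PER_HOUR / DAYS_BETWEEN_CHANGES  # seconds of shift per day
per_hour = per_day / 24
per_minute = per_hour / 60
per_second = per_minute / 60

print(f"per day:    {per_day:.2f} s")
print(f"per hour:   {per_hour:.3f} s")
print(f"per minute: {per_minute:.5f} s")
print(f"per second: {per_second:.7f} s")
```

Under this assumption the daily step comes out a little under 20 seconds, and the smaller steps are of the same order as the round figures quoted above.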

Phase is a relative quantity. In physics, everything is relative. The same is true in biology

(and geology, chemistry, sociology, anthropology, …),

and we emphasize that phase is the right way to think of biological processes.

Cell division is characterized by a series of phases, for one example.

These phases can take variable durations, but the sequence of events happens predictably: G1, S, G2, and M phases.

Time itself is of course relative.

We discuss phase and period in terms of timing and duration. Phase describes the relative timing between processes,

and period describes the duration being looked at. Phase is usually a function of period.

Two processes differ in their timing based on the phase difference between them at each period.

Lunch and supper differ in their phases at a period of a day. Over a period of an hour,

they may have similar phases in how we go about eating each meal.

Over the duration of two related processes, this behavior of having similar phases might be expected.

Over other periods, phases are expected to differ.

Thus, duration (period or frequency) and phase are two ways of describing time,

and together they capture how things change in time.

We use phase values at a series of periods to tell time, and so does the brain, as explained further below.

Computers, on the other hand, internally count the number of some very short periods, "ticks", since some fairly arbitrary moment.

My computer tells me that it's been 3776668273 seconds since midnight 1 January 1904.

We certainly do not get much information from that sort of time value. But computers do the arithmetic to translate between ticks and the phase values over periods we understand.
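A minimal sketch of that translation using Python's standard library. It assumes the count starts at midnight on 1 January 1904 and ignores time zones and leap seconds; the names `EPOCH_1904`, `from_ticks`, and `to_ticks` are hypothetical.

```python
from datetime import datetime, timedelta

# Assumption: ticks are whole seconds counted from this moment,
# with no time zone or leap-second corrections.
EPOCH_1904 = datetime(1904, 1, 1)


def from_ticks(seconds: int) -> datetime:
    """Translate a tick count into calendar and clock phases."""
    return EPOCH_1904 + timedelta(seconds=seconds)


def to_ticks(moment: datetime) -> int:
    """Translate calendar and clock phases back into a tick count."""
    return int((moment - EPOCH_1904).total_seconds())


moment = from_ticks(3776668273)
print(moment.isoformat())
print(to_ticks(moment))
```

The library hides the irregular periods (months of 28 to 31 days, leap years) that make the translation rules complicated.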

It is instructive to consider how we can perform such translations. We can set up rules to take any two dates and estimate the number of seconds between them.

Those rules can be a bit complicated because of the variability in the periods we use. But if we rely only on fixed periods,

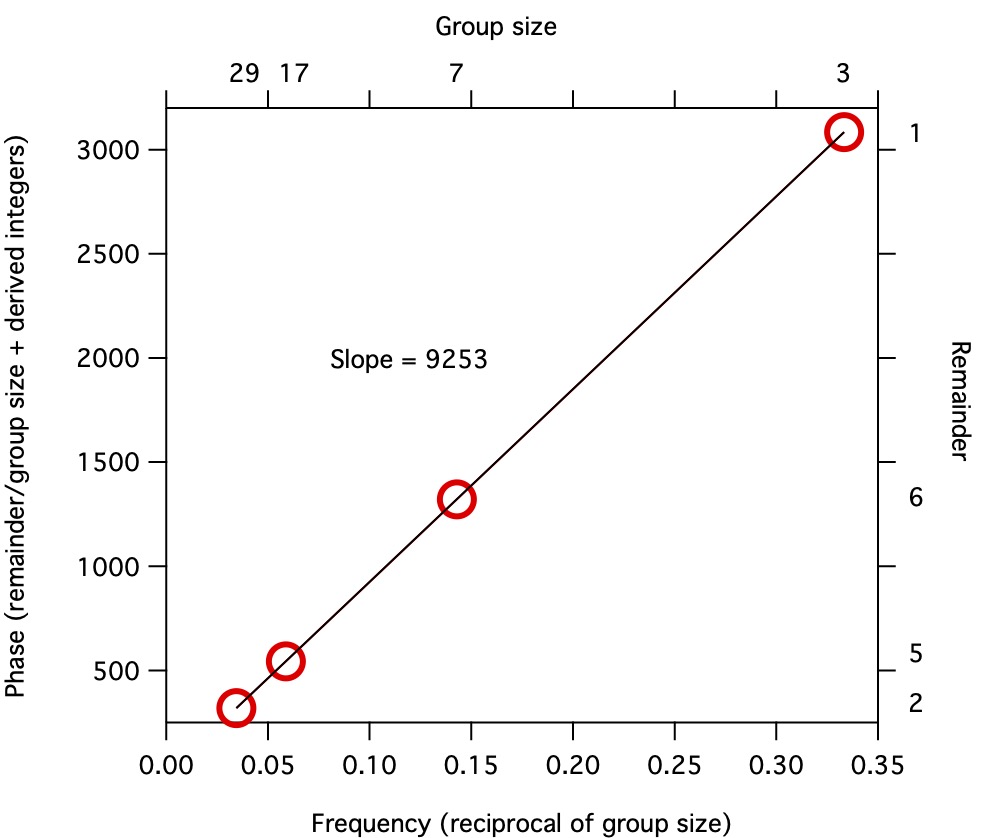

we can easily compute the time between two dates, using the Chinese Remainder Theorem.9

Imagine that you are the Emperor, and want to count how many soldiers you have in your army. You stand on top of a mountain looking down on your troops,

and direct your generals to have the soldiers organize themselves into small groups of 3. They do so easily by shifting their positions a little bit.

But one soldier is left over at the end, the remainder of your desired number after dividing by 3.

You then direct them to form groups of 7. They do so, and you write down that there were 6 soldiers remaining.

You do the same with groups of 17 and 29, finding remainders of 5 and 2.

From those quick manipulations, you can determine the total number of troops, in this case 9253.
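The Emperor's reckoning can be sketched as follows. This is one standard construction of the Chinese Remainder Theorem solution, not necessarily how the count was done historically; it assumes the group sizes share no common factors, and the function name `chinese_remainder` is hypothetical.

```python
from math import prod


def chinese_remainder(remainders, moduli):
    """Smallest non-negative n with n % m == r for each pair (r, m).

    Assumes the moduli are pairwise coprime.
    """
    total = prod(moduli)
    n = 0
    for r, m in zip(remainders, moduli):
        partial = total // m
        # pow(x, -1, m) is the modular inverse of x modulo m (Python 3.8+).
        n += r * partial * pow(partial, -1, m)
    return n % total


# Remainders 1, 6, 5, 2 after grouping by 3, 7, 17, 29.
print(chinese_remainder([1, 6, 5, 2], [3, 7, 17, 29]))  # 9253
```

The answer is only determined up to multiples of 3 × 7 × 17 × 29 = 10353, so the Emperor must already know that the army is smaller than that.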

This result generalizes. We can do the same thing for data that aren't discrete, using continuous phase and frequency values.

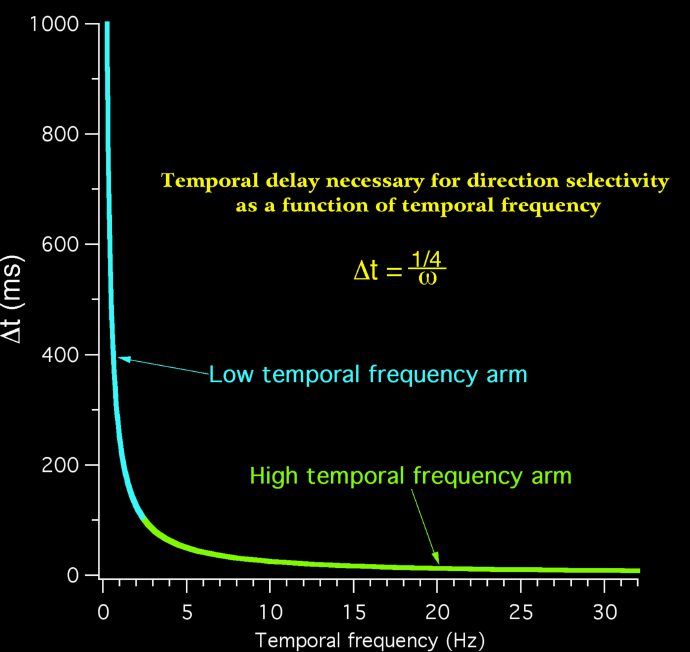

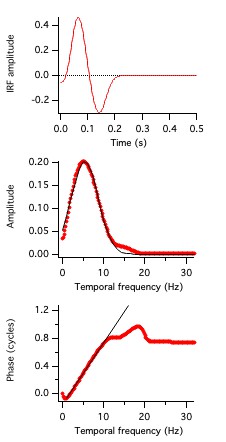

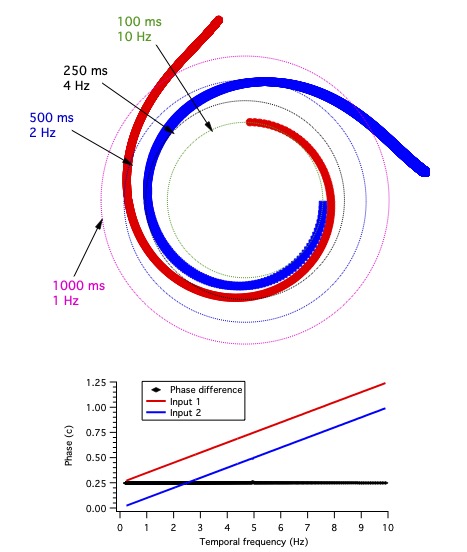

The slope of phase vs. temporal frequency is latency. This is best appreciated by knowing a basic property of Fourier transforms:

$$f(t - L) \;\Leftrightarrow\; e^{2\pi i L \omega}\, F(\omega)$$

When people think of latency in the one-dimensional time domain, they generally rely on picking particular moments in time.

These choices do not necessarily reflect the underlying functions (e.g., stimulus and response,

neither of which is typically a "delta" function, infinitely narrow and tall, completely localized in time).

The choices of moments might just be onsets or peaks of those stimulus and response functions, for example.

Because of these choices, that sort of time-domain definition of latency is notoriously unreliable.

In contrast, the definition used here, the slope of phase vs. frequency, is precise, accurate,

and generally applicable, as it is based on the equivalence above.
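A numerical illustration of this property, as a sketch under stated assumptions: the stimulus is a made-up smooth bump sampled at 1 kHz, the response is simply a copy delayed by 100 ms, and phase is reported as a lag so that it increases with frequency. The helper names `dft_bin` and `phase_lag_cycles` are hypothetical, and the transform is written out by hand to avoid any library dependency.

```python
import cmath
import math


def dft_bin(signal, k):
    """One discrete Fourier coefficient, using the exp(-2*pi*i*k*n/N) convention."""
    n = len(signal)
    return sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))


def phase_lag_cycles(stimulus, response, k):
    """Phase lag of the response behind the stimulus at bin k, in cycles (0..1)."""
    ratio = dft_bin(response, k) / dft_bin(stimulus, k)
    return (-cmath.phase(ratio) / (2.0 * math.pi)) % 1.0


# Hypothetical data: 1 s sampled every 1 ms; the response lags by 100 samples.
N, DT, DELAY = 1000, 0.001, 100
stimulus = [math.exp(-((i - 200) / 20.0) ** 2) for i in range(N)]
response = [stimulus[(i - DELAY) % N] for i in range(N)]  # circular shift

for k in (1, 2, 3, 4):
    freq_hz = k / (N * DT)
    print(freq_hz, round(phase_lag_cycles(stimulus, response, k), 3))
```

The lag grows by 0.1 cycle for each additional hertz, a slope of 0.1 s, which recovers the 100 ms delay without picking any particular moment on the bump.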

The addition of integer numbers of cycles to the phase values as described above is not as ill-defined as it might seem.

For our purposes, phase is measured at each of a series of temporal frequencies that are spaced closely enough together

that neighboring phase values are separated by less than a cycle.

The only manipulation that is needed is to ensure that the phase values are slowly increasing as a function of temporal frequency

(e.g., the "unwrap" operation in Igor).
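For readers without Igor, a minimal sketch of unwrapping with phase in cycles. This uses the common nearest-cycle rule, which assumes neighboring values differ by less than half a cycle; it is my illustration and not Igor's implementation. The name `unwrap_cycles` is hypothetical.

```python
def unwrap_cycles(phases):
    """Add whole cycles so each value stays within half a cycle of its neighbor."""
    unwrapped = [phases[0]]
    for p in phases[1:]:
        step = p - unwrapped[-1]
        step -= round(step)  # bring the step into the range -0.5..0.5 cycle
        unwrapped.append(unwrapped[-1] + step)
    return unwrapped


# Phases measured modulo one cycle at evenly spaced frequencies.
wrapped = [0.1, 0.4, 0.7, 0.0, 0.3, 0.6]
print([round(p, 3) for p in unwrap_cycles(wrapped)])  # [0.1, 0.4, 0.7, 1.0, 1.3, 1.6]
```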

Another way to understand that latency is the slope of phase vs. frequency is to think about what latency does.

If some process responds with a fixed latency to an input, when does the response occur at different frequencies?

Let's take an example where the latency is 100 milliseconds (100 ms). At a frequency of 1 cycle/second

(1 Hz), 100 ms is a tenth of a cycle (0.1 c).

At 2 Hz, one cycle takes half a second, 500 ms, so 100 ms is 1/5 c = 0.2 c.

At 5 Hz, one cycle is 200 ms, so 100 ms is 0.5 c.

At 10 Hz, one cycle is 100 ms, so the phase is 1 c.

If you plot these phase values (0.1, 0.2, 0.5, 1)

against these frequencies (1, 2, 5, 10),

you get a line where phase (in cycles) = 0.1 (s) * frequency (Hz). The slope of the line is 0.1 s = 100 ms.

As frequency gets higher, the period gets shorter, and a fixed latency takes up a larger portion of a cycle.

This hopefully makes it clear that latency is exactly the slope of phase vs. frequency.

This relation can be written as φ=Lω, phase is the product of latency and temporal frequency,

ignoring momentarily what will be explained next.
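The arithmetic of the 100 ms example can be reproduced in a few lines. This is only an illustration; the helper names are made up here, and the line is forced through the origin because the intercept has not yet been introduced.

```python
def phase_in_cycles(latency_s, freq_hz):
    """Fraction of a cycle occupied by a fixed latency at a given frequency."""
    return latency_s * freq_hz

def slope_through_origin(xs, ys):
    """Least-squares slope of a line constrained to pass through (0, 0)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

freqs_hz = [1, 2, 5, 10]
phases_c = [phase_in_cycles(0.1, f) for f in freqs_hz]  # 100 ms latency
latency_s = slope_through_origin(freqs_hz, phases_c)
```

The recovered slope is the latency in seconds, because phase is in cycles and frequency is in cycles per second.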

In the typical experiment where a stimulus evokes a response, both the stimulus and response are extended in time.

The poor localization in time makes it difficult to work in the time domain, but working in the frequency domain,

as we will explain with numerous examples below, facilitates both execution and analyses of experiments.

In the frequency domain, one measures both amplitude and phase as functions of frequency.

Amplitudes typically grow weak at low (too slow) and high (too fast) frequencies.

For our prime interest in timing, phase is more important than amplitude.

As above, phase values increase with frequency, with the slope giving the latency.

The key parameter that describes timing, however, is the intercept of the phase vs. frequency line, the phase at 0 Hz.

Note that this can not be directly measured, since a single cycle at 0 Hz lasts forever,

but is instead extrapolated from the data at low but non-zero frequencies.

Thus, the line that plots phase vs. temporal frequency is φ=Lω+φ0,

where φ0 is the phase at 0 Hz.
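As a sketch of how latency and the phase at 0 Hz could both be estimated from measured phases, an ordinary least-squares line can be fit to unwrapped phase against frequency. The function name and the sample values below are hypothetical, and real data would normally be weighted by response amplitude or reliability, which is omitted here.

```python
def fit_phase_line(freqs_hz, phases_c):
    """Least-squares fit of phase = latency * frequency + phi0.

    Returns (latency in seconds, phi0 in cycles). phi0 is the extrapolated
    intercept at 0 Hz, which is never measured directly.
    """
    n = len(freqs_hz)
    mean_f = sum(freqs_hz) / n
    mean_p = sum(phases_c) / n
    sxx = sum((f - mean_f) ** 2 for f in freqs_hz)
    sxy = sum((f - mean_f) * (p - mean_p) for f, p in zip(freqs_hz, phases_c))
    latency_s = sxy / sxx
    phi0_c = mean_p - latency_s * mean_f
    return latency_s, phi0_c

# Hypothetical noise-free measurements: 60 ms latency, phi0 of -0.2 c.
freqs_hz = [0.5, 1.0, 2.0, 4.0, 8.0]
phases_c = [0.06 * f - 0.2 for f in freqs_hz]
latency_s, phi0_c = fit_phase_line(freqs_hz, phases_c)
```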

The phase at 0 Hz, what we call absolute phase (analogous to the temperature of absolute zero,

which can not be reached but can be extrapolated as approximately -273°C;

the term is an oxymoron, given that phase is relative), describes the shape of a function of time.

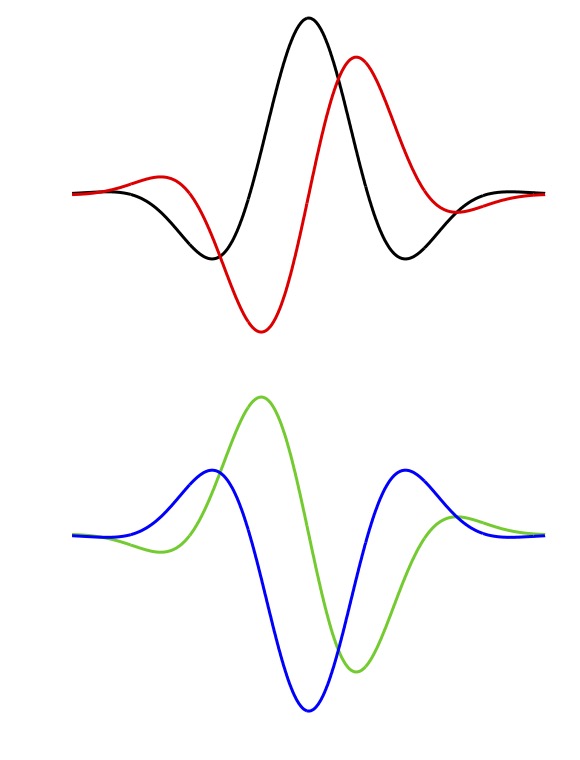

Phase is by definition a relative quantity, and we use a convention where phase is measured in cycles,

with 0 cycles corresponding to the peak of an even (symmetric with respect to time, after accounting for latency) function,

and +0.25 cycles corresponding to an odd (antisymmetric) function with a negative phase preceding its opposing positive phase (Figure 6, black and red curves).

Absolute phase values of 0.5 c and -0.25 c correspond to shifting the black and red functions by a half cycle, giving the blue and green traces.

Absolute phase values just less than 0 c are termed phase leads; phase lags correspond to absolute phase values just above 0 c.

These terms apply to functions with phase values around 0.5 c as well, so that an absolute phase value of 0.4 c is a phase lead.

In the visual system, illustrated with examples below, neurons that respond with phase values around 0 c are called ON-center,

and those with phase values around 0.5 c are called OFF-center.

This is a somewhat unfortunate terminology originating with early studies where only bright stimuli could be easily generated,

so cells that are excited by dark stimuli were tested by turning off the bright stimulus.

Our goal is to characterize how the brain transforms external sensory stimuli into neuronal activity.

These characterizations are simplified by thinking in terms of frequency, amplitude, and phase.

In the frequency domain, any stimulus and any response (at least, any that we can generate and measure;

formally, members of the ℒ² function space, the "square-integrable" functions)

can be characterized in terms of amplitude and phase as functions of frequency.

The system (e.g., the brain) that turns the stimulus into the response is analyzed by correlating the stimulus and the response.

In the time domain, the stimulus and the response are related by a slightly complicated operation termed "convolution" (it's convoluted!), which has to be undone to characterize the system.

In the frequency domain, the same analysis is simply division of the response by the stimulus.

Formally, this is division of complex numbers, but in terms of the real components of amplitude and phase,

it is division of amplitudes and subtraction of phases. The result of this analysis is called a transfer function,

or a kernel, among many names. We will refer to kernels, in both time and frequency domains.

The phase of the kernel is thus the difference of response minus stimulus phases.

The brain or other system changes the stimulus phase into the response phase, and the kernel describes this change.
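At a single frequency, this division can be sketched as follows. The function names and the example numbers are illustrative assumptions; phases are in cycles, as elsewhere in the text.

```python
import cmath
import math

def to_complex(amplitude, phase_cycles):
    """Represent an amplitude and a phase (in cycles) as one complex number."""
    return cmath.rect(amplitude, 2 * math.pi * phase_cycles)

def kernel_at_frequency(stim_amp, stim_phase_c, resp_amp, resp_phase_c):
    """Kernel at one frequency: complex response divided by complex stimulus.

    Returns (amplitude, phase in cycles), with the phase reported between
    -0.5 c and +0.5 c.
    """
    k = to_complex(resp_amp, resp_phase_c) / to_complex(stim_amp, stim_phase_c)
    return abs(k), cmath.phase(k) / (2 * math.pi)

# Hypothetical values: stimulus 2 units at 0.10 c, response 30 ips at 0.35 c.
gain, phase_c = kernel_at_frequency(2.0, 0.10, 30.0, 0.35)
```

The complex division gives the same answer as dividing the amplitudes (30 / 2) and subtracting the phases (0.35 c - 0.10 c).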

We model this process in terms of the response R arising from the stimulus S via the kernel K: in the frequency domain, R(ω) = K(ω)·S(ω), or equivalently K(ω) = R(ω)/S(ω).

So time is two-dimensional, with one dimension being temporal frequency, and the other phase.

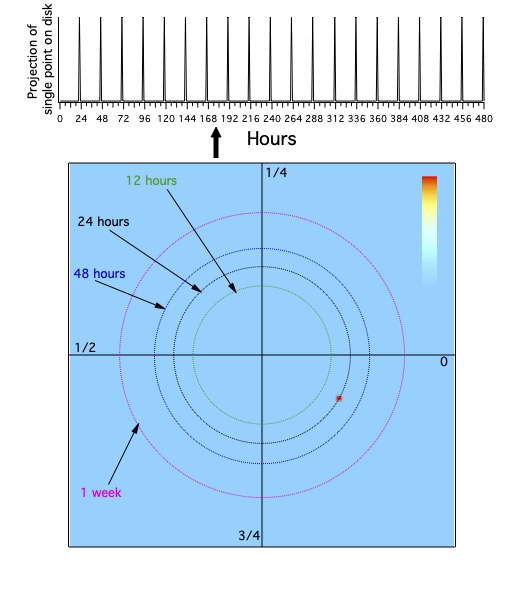

A standard way to think about the two-dimensional space of time is as a disk, with the center point missing (Figure 14).

The distance out from the center is period (the reciprocal of frequency), and the distance around the disk at any fixed period is phase.

This representation of time is clearly different from the time line!

We are quite familiar with such representations of time. Our clocks tell us what time it is by giving us phase values for some small number of periods.

Analog clocks are versions of the disk, usually showing the hour (the phase over a 12 or 24 hour period) with a short hand,

and the minute (phase over a 1 hour period) with a longer hand, farther out on the disk - so the opposite direction from the center compared with Fig. 14,

where longer periods are farther out.
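The reading of a clock as a pair of phase values can be written out directly. This is a small sketch with made-up names, assuming a 12 hour dial.

```python
def clock_phases(seconds_since_midnight):
    """Phases (in cycles) shown by the hour and minute hands of a 12 hour clock."""
    hour_hand = (seconds_since_midnight % (12 * 3600)) / (12 * 3600)
    minute_hand = (seconds_since_midnight % 3600) / 3600
    return hour_hand, minute_hand

morning = clock_phases(3 * 3600 + 30 * 60)     # 3:30
afternoon = clock_phases(15 * 3600 + 30 * 60)  # 15:30
```

The two times are twelve hours apart on the time line, yet the clock shows the same pair of phases for both, which is exactly the remainder arithmetic that telling time relies on.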

On our calendars, weeks are laid out, containing 7 days. Some of our activities are centered on certain days,

and thus can be considered as having different absolute phase values. Maybe we tend to eat tacos on Tuesdays,

and worship on Fridays, Saturdays, or Sundays, structuring our behaviors around different positions in the week.

We will see that absolute phase in a neuron corresponds to the timing relative to some phase of the process that affects the neuron,

so that the neuron anticipates, goes along with, or follows behind the timing of the process.

These different neuronal timings combine to provide us with the directions in which things change.

Neuroscientist Dean Buonomano's excellent book

Your Brain is a Time Machine describes many of the phenomena we treat, but he relies on one-dimensional time.

I don't mean to criticize him personally, but I think his book makes a fine example of how treating time as two-dimensional gives us more insight.

His emphasis on moments of time leads to all sorts of problems. What's more, he barely touches on direction.

He argues that time is more complicated than space.

Because rodents have neurons called "place cells" that are active when an animal passes through a certain region of their environment,

he claims that their brains have a spatial map. He almost gets it right by noting that their mapping strategy is more flexible than GPS.

A rat that is trained to run down a track will have place cells that are active at different positions along the track;

if you block the track halfway along, the neurons adjust to the new length.

This is to be expected if you realize that the neurons care about the spatial phase along the track.

All of our neurons care about phase, and time is not so complicated when thought of in its natural two dimensions.

Buonomano continues by noting that we can navigate through space but not time.

We will discuss at length how temporal phase gives us the sense of where we are in whatever processes we're involved in.

What counts is that we know whether we're at the beginning, middle, or end (or anywhere in between) of what we're doing.

If we want to restart at the beginning after we've gotten to the middle, we can!

We are able to perform complex tasks in the correct order because we "know" where we are in time, and importantly, where we've been and where we're going.

We are simply misled by the unfortunate conditioning we've undergone claiming that time is one-dimensional.

He notes that we use modular arithmetic (meaning remainders, which is the discrete version of phase) to tell time,

and states that this is confusing, especially to children.

He relies on Piaget's studies of how children process time.

What Piaget showed was that children internalize time in terms of the processes that they're involved in.

This intuitive time is exactly how we use time when we're not trapped by the delusion of moments,

perhaps as we did before we were conditioned to think in terms of the timeline.

We will discuss how time interacts with other dimensions such as space, odor, happiness, and everything else that concerns us,

to provide the information we need for all of our behaviors. What we need to know is how things are changing in time,

and how to make our bodies and the external world change in time. The key notion we emphasize is direction.

Buonomano also has a great summary of work on timing in fruit flies that was carried out by Seymour Benzer and colleagues.

Buonomano made an understandable error in claiming that Benzer was a Nobel laureate; Benzer was famously never awarded that particular prize

(Benzer's mother complained that was the only thing her neighbors cared about).

Max Delbrück was instrumental in Benzer's work. They were founders of molecular biology, and in particular of the study of how genes affect behavior.

Benzer mutated genes in fruit flies and then cleverly tested the flies to reveal behavioral changes.

His lab identified hundreds of such mutants. A few of the best-known examples have to do with circadian rhythms.

In fruit flies, Benzer could characterize the way cells signal the time of day. The mechanisms behind these cellular clocks are now well-described.

Thinking of the brain as a clock, or really many billions of clocks, we will now describe how neurons tell time.

These cells provide timing information as phase values across a range of temporal frequencies, as do clocks.

Neurons typically produce signals consisting of action potentials, also known as spikes.

These are voltage changes on the time scale of about a millisecond. But little of our behavior happens on that time scale,

so neurons signal by generating series of spikes, often termed spike trains.

A popular idea about what is important about a spike train is that the firing rate,

how many spikes are generated in a given period of time, determines the function of the neuron.

If a neuron fires at 100 spikes per second, it can strongly influence neurons to which it communicates.

But absolute firing rates vary tremendously across the nervous system, so in some neurons,

firing rates of only a few spikes per second can be effective.

What counts is when firing rates go up and down.

That is, what matters is that firing rates vary over time. Consider a neuron that cares about light inputs to the retina.

If light reaching the eye varies in its luminance over time, the neuron's activity is likely to be modulated in time by the stimulus.

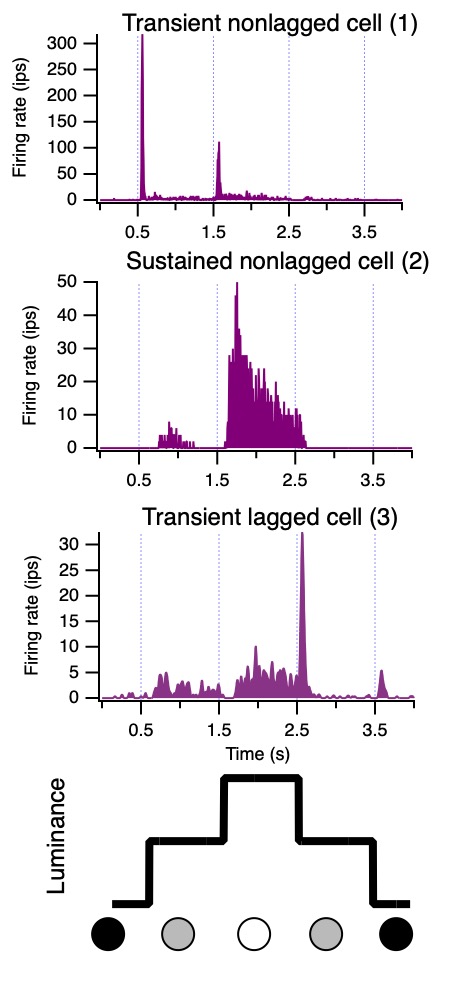

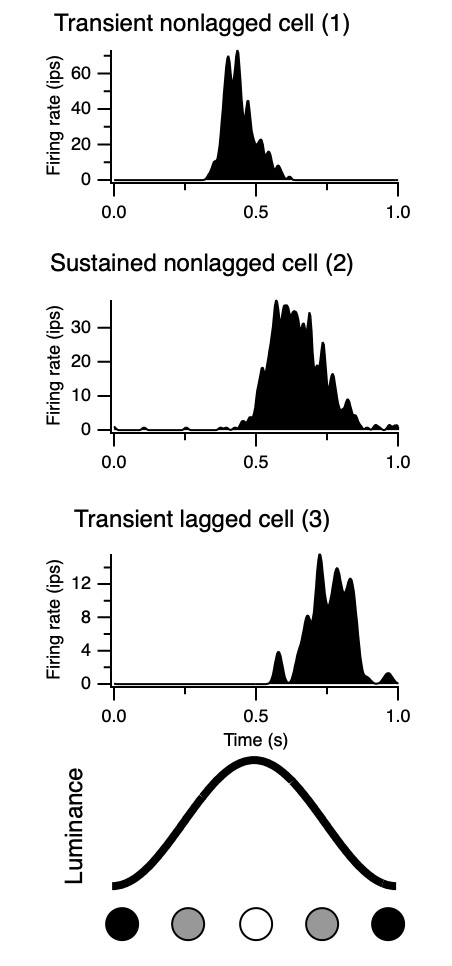

An example is illustrated by Movie 2. The stimulus is a small spot, and the luminance changes over time in a 4-part sequence,

from dark to background to bright to background and back to dark.

The movie shows several cycles of the stimulus, and the audio lets you hear when spikes occur during the stimulus presentation.

Firing rate increases around the times when luminance increases, at either the dark-to-background transition or the background-to-bright transition.

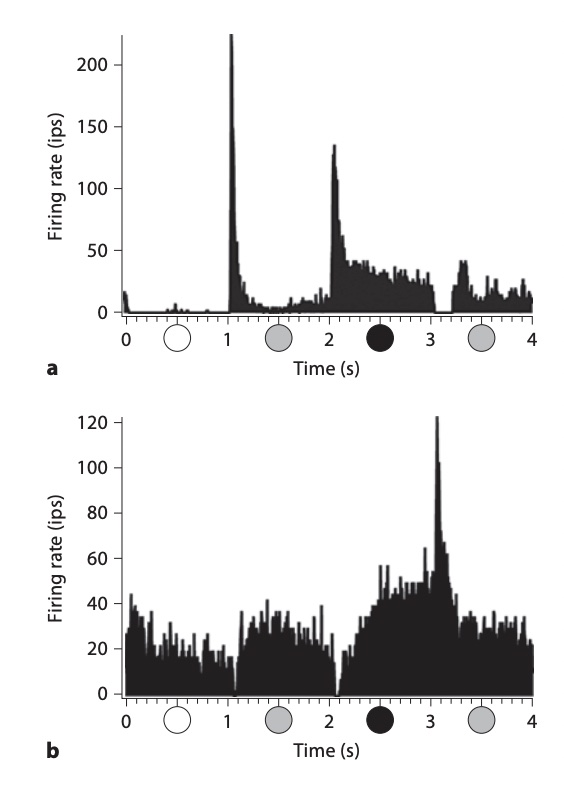

Averaging across many cycles of the stimulus, we show how firing rate

(in impulses per second, "ips", the same as spikes per second) depends on the stimulus (Figure 7).

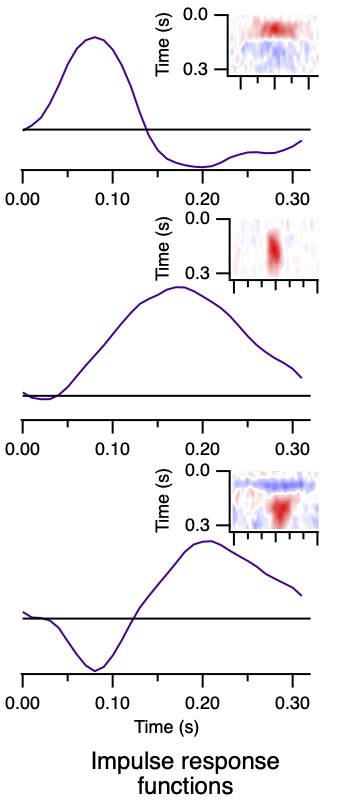

Three neurons illustrate how there is a range of timing. The transient nonlagged cell (1) at the top

responded primarily just at the luminance increases, after 0.5 and 1.5 seconds.

The sustained nonlagged cell (2) also fired at those points, but continued to fire as long as the spot was bright.

The transient lagged cell (3) did not fire at the stimulus onsets, but was active during the period when the spot was bright,

then fired strongly at offset (2.5 s).

This response therefore lags the stimulus.

The terms transient and sustained have been applied to certain visual neurons since the early 1970s

(Cleland et al. 1971).

Related terms like phasic and tonic are also used.

The term lagged, on the other hand, originates in this context from

Mastronarde 1987.

The most important note is that these terms refer to temporal differences. That fact has often not been appreciated.

Keep in mind that just as many OFF cells exist that fire a half-cycle away from these ON responses,

showing responses similar to these cells but to a stimulus that turns dark rather than bright.

The distinction between ON and OFF cells becomes slightly less important when thinking of two-dimensional time,

but is biologically rooted in the

retinal mechanisms that produce them.

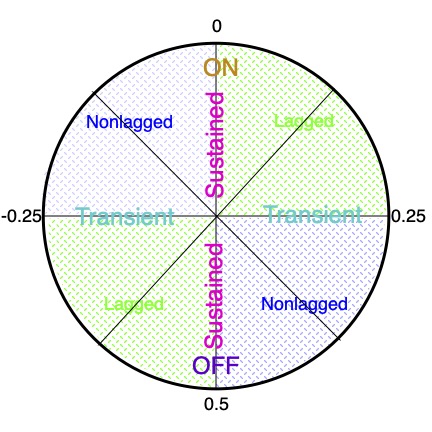

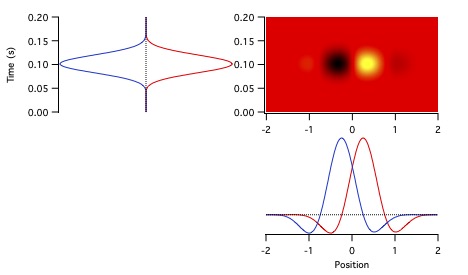

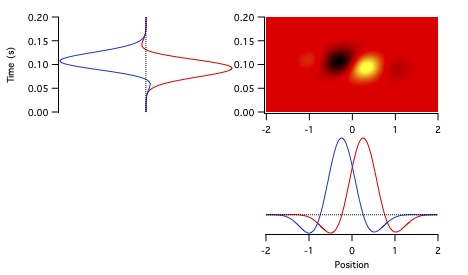

Different cells respond at all of the phases around the cycle (Fig. 9).

ON cells have absolute phase near 0 c, between -0.25 c and +0.25 c,

OFF cells near 0.5 c, between +0.25 c and +0.75 c

(note again that adding an integer number of cycles to a phase value does not change it,

so -0.75 c to -0.25 c is an equivalent range for OFF cells).

Cells in the quadrants between 0 and 0.25 c or between 0.5 and 0.75 c are called lagged cells,

and those in the other two quadrants are called nonlagged cells, roughly speaking.

As noted above, transient cells have absolute phase approaching ±0.25 c,

and sustained cells have absolute phase near 0 or 0.5 c.
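These ranges amount to a simple rule for labeling a cell from its absolute phase. The sketch below is illustrative only: the function name is invented, and the treatment of the exact quadrant boundaries (for example, calling a phase of exactly 0 c nonlagged) is an arbitrary assumption, since the categories are only rough.

```python
def classify_cell(absolute_phase_c):
    """Label a cell as ON/OFF and lagged/nonlagged from absolute phase in cycles."""
    # Wrap into the range [-0.25, 0.75), since whole cycles do not matter.
    p = (absolute_phase_c + 0.25) % 1.0 - 0.25
    polarity = "ON" if p < 0.25 else "OFF"
    # Phase relative to the center of the ON (0 c) or OFF (0.5 c) range.
    relative = p if polarity == "ON" else p - 0.5
    timing = "lagged" if relative > 0 else "nonlagged"
    return polarity, timing

labels = {phase: classify_cell(phase) for phase in (-0.2, 0.2, 0.4, 0.6, -0.4)}
```

Note that 0.6 c and -0.4 c receive the same label, because they differ by a whole cycle.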

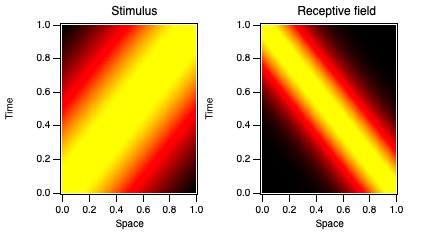

An important concept, really the primary concept, in sensory physiology, is the "receptive field" of a neuron.

The receptive field can be defined broadly to be the set of stimuli that modulate the neuron's activity.

Often, the term is applied to the spatial receptive field, the region in space where stimulation can affect the neuron's firing.

But we will focus largely on the temporal receptive field. This concept is less familiar.

It can be considered to refer to how the neuron turns stimulus timing into response timing (the temporal kernel).

The time domain temporal kernels, also known as impulse response functions, are shown in Fig. 10 for the 3 cells in Figs. 7 and 8.

These are like the traces in Fig. 6 (green, black, and red). This representation of temporal receptive fields is conventional.

Unfortunately, quantifying the differences between these three examples in the time domain is challenging.

However, they are easily described in terms of phase values.

The Fourier transforms of the functions shown in Fig. 10 provide amplitude and phase vs. frequency from which one obtains absolute phase and latency.

More examples of how absolute phase corresponds to response timing are available

(e.g., Figure 11 in Saul and Humphrey 1990;

Figure 9 in Saul 2008;

and Figures 2 and 3 in Saul et al. 2005).

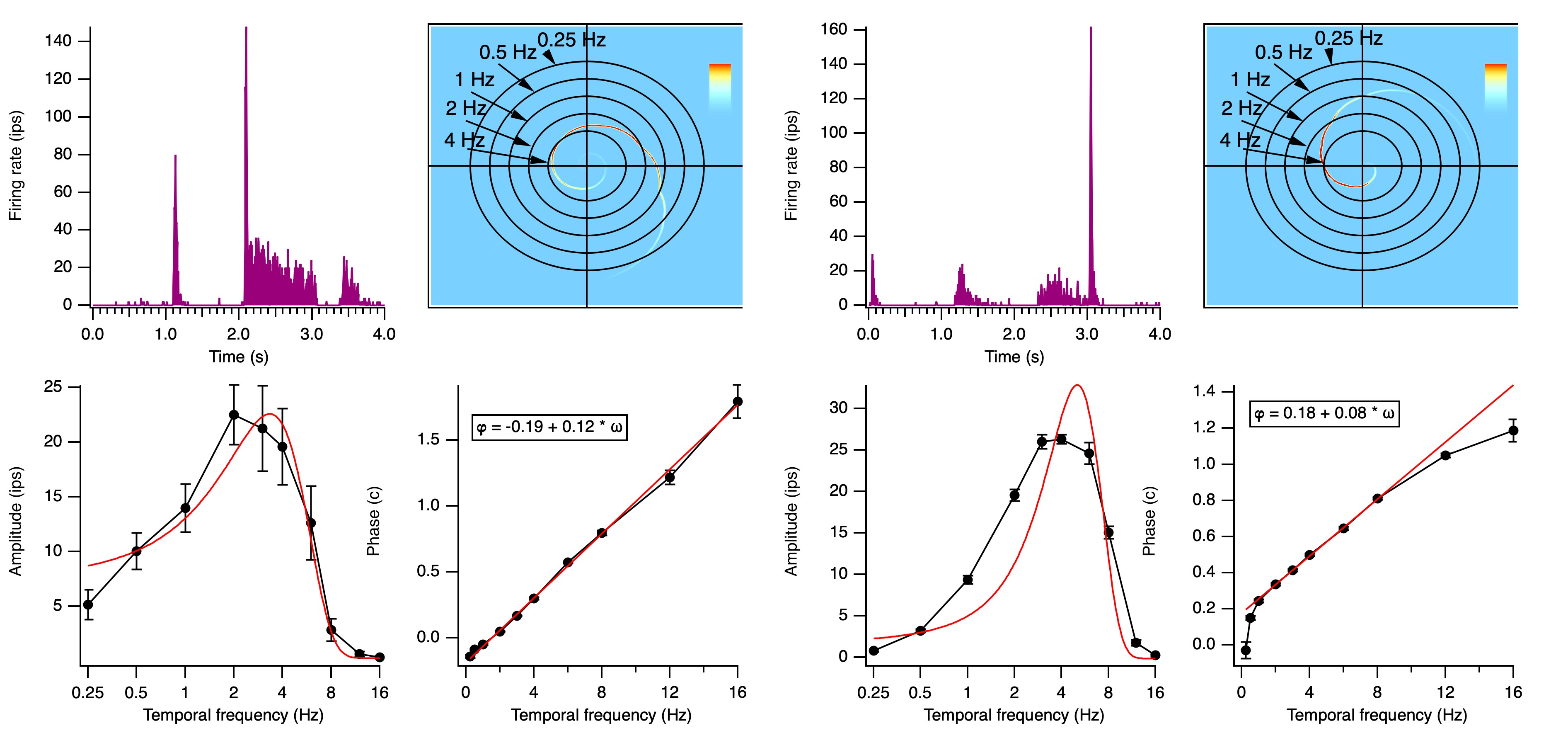

Figure 11 shows the temporal receptive fields of two kitten LGN neurons.

The cell on the left is transient nonlagged, with an absolute phase of about -0.2 c.

Its temporal receptive field therefore lies about 80% of the way to the downward axis at low frequencies.

The transient lagged cell on the right has an absolute phase of about 0.2 c, so starts about 80% of the way to the upward axis.

They are about a quarter cycle apart at 0.5-4 Hz. By 6-8 Hz (portions within the 4 Hz circle), they have similar phase values.

The latencies differ considerably, with the nonlagged cell completing more than 1 c

while the lagged cell doesn't pass through a single cycle over about 8 Hz,

as can be seen in the temporal receptive field plots.

People often ignore what they consider to be messiness in order to supposedly simplify their thinking,

for instance by considering race to be meaningful, or by deciding which sports team or athlete is #1.

We tend to impose artificial thresholds in order to create discontinuities where there are none:

if team A beats team B, A is thought to be clearly superior to B, even though if they played multiple times

they would each win some and lose some, and in reality are probably similar in capabilities.

Whether this tendency to categorize corresponds to the way the brain works innately,

perhaps a property of associative memory,

or comes about through cultural conditioning, remains unclear.

It may be an adaptation for making decisions rapidly,

similar to beliefs. We are nonetheless quite capable of relying on continuous, fuzzy, phase-based information and actions.

Consider the very important topic of moods. We sometimes pretend that sadness and happiness are discrete,

but we know that our sadness can vary, from the sorts of devastation we feel when we lose a loved one,

to when we hear about the death of somebody we don't know, to when we lose a game.

Our moods obviously change over time, often at a very slow pace, but occasionally rapidly.

These changes in mood motivate much of our behavior.

Our goal is to understand some of the neural mechanisms underlying these functions.

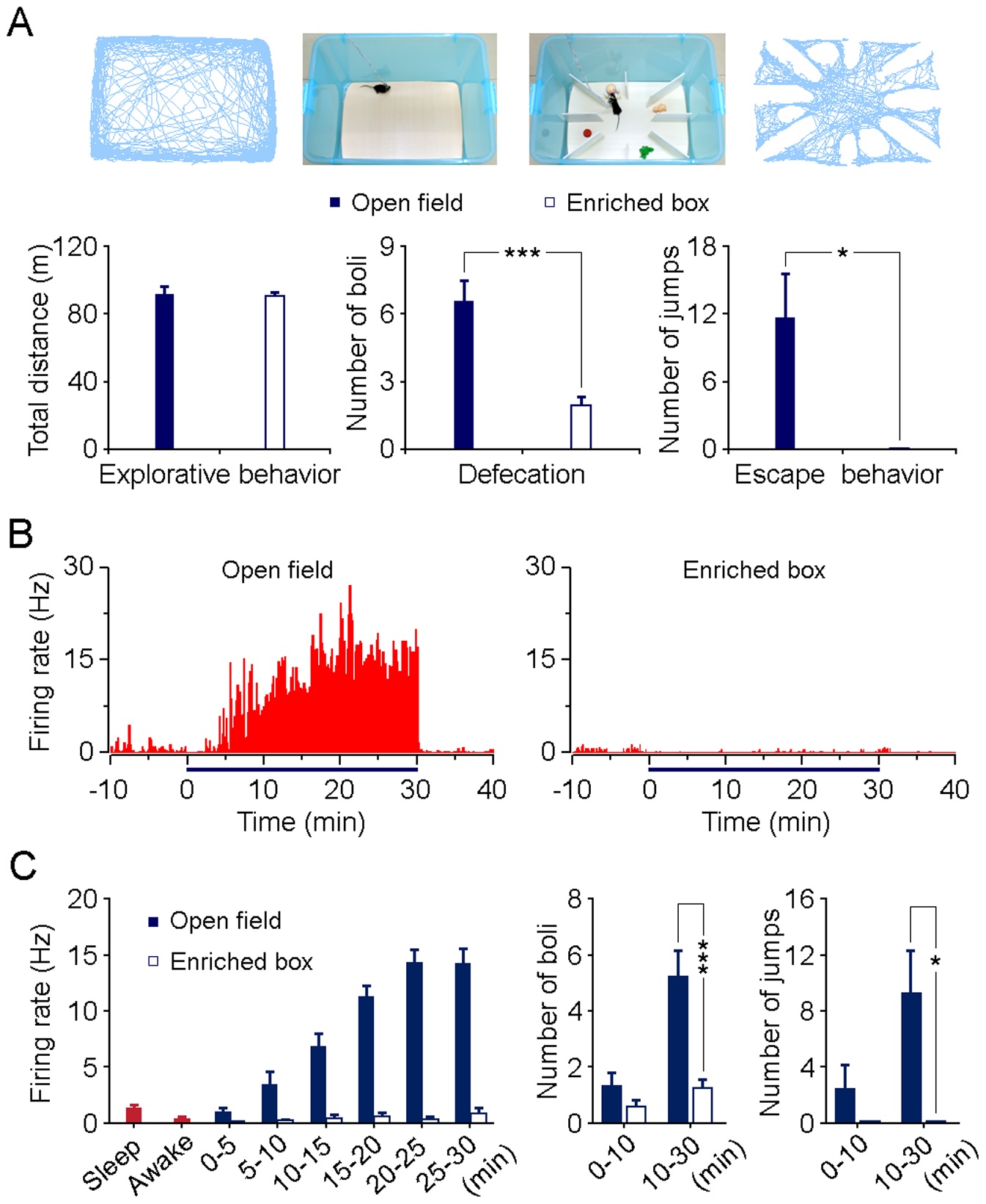

Wang and colleagues 2011

recorded from neurons in the mouse amygdala,

a brain region that is important for emotional processing.

They found that many neurons fired in relation to the anxiety of the mice.

Figure 12 illustrates some of their results.

This is direct evidence that single neurons can show slowly modulated activity,

with phase values that correspond to highly sustained and lagged patterns.

Something like this must be true if you accept that single neurons underlie our behaviors,

but it could have been that only activity across populations of neurons would show it.

The transient, sustained, and lagged kitten LGN neurons illustrated above provide an example of how certain human behaviors might be created.

Tallal 1980

studied a common problem seen in 5-10% of children entering primary school.

These children had difficulties that people thought were due to defects in how they processed language.

Tallal and colleagues demonstrated that the defect appears to lie at a low level, and is specific to temporal processing.

These children have deficiencies in processing rapid changes in any modality (auditory, visual, tactile, motor, ...).

I would characterize this as a deficit in transient neural mechanisms,

those that respond best as things are changing rapidly, with a phase that leads the input by about a quarter cycle.

Our speculation is that the neural substrate lies in thalamus, a part of the brain through which almost all inputs to cortex pass, covering all modalities.

The main visual thalamic nucleus is the LGN. Neurons in thalamus vary in their timing, as described above and discussed further below.

Disruption of transient timing in thalamus could produce the problems seen in the children studied by Tallal.

Paula Tallal tells a story about when she would go to children's homes to try to help them.

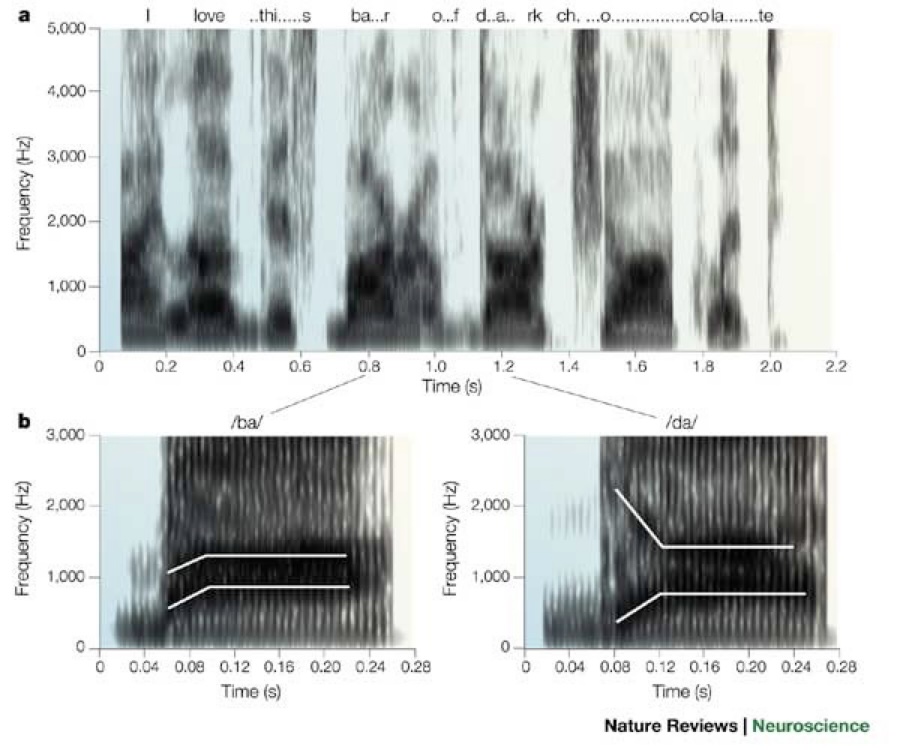

The project used synthesized speech that slowed down the rapid changes in sounds that distinguish syllables like /ba/ and /da/ (Figure 36).

The children would put on headphones to listen to a story generated with the synthesized speech, while playing a video game related to the story.

The affected children would enjoy the story, being able to finally understand what was being said.

The unaffected siblings, on the other hand, would hate listening to the odd speech and would take off the headphones in disgust.

Another large group of children (as well as adults) have attention deficit hyperactivity disorder (ADHD).

This disorder may correspond to a deficit in sustained and lagged mechanisms in thalamus, with phase values near and greater than 0 c.

Those neurons might be important in sustaining attention, for instance.

They could also play an important role in reinforcement learning, as detailed below.

Besides these disorders, processing of time varies across human populations without being considered pathological.

We know some people who are always late. If they need to be somewhere by noon, they only start getting ready at noon.

We speculate that they lack transient neurons that respond ahead of stimuli, with phase leads.

Other people tend to do things ahead of time, getting prepared in advance, getting to appointments early, etc.

They may have an abundance of transient neurons.

It may be difficult to understand this in terms of points on a line, but easy when considering deficits in sustained and transient phase processing.

A misconception that is worth addressing is that many people think analysis in the frequency domain somehow applies only to periodic functions.

This is far from the truth. As noted above, any reasonable function can be examined in the frequency domain,

technically the "square-integrable" functions, those whose squared magnitude has a finite integral over all time,

which loosely means that their non-zero values do not extend out to infinity in time. For our purposes, nothing about the work described here entails periodic changes in any variable.

Keep in mind that the description of a function in the frequency domain consists of amplitude and phase across the entire range of frequencies.

Periodic functions are simply those where the amplitude is zero at all but a discrete set of those frequencies (the fundamental and its harmonics).

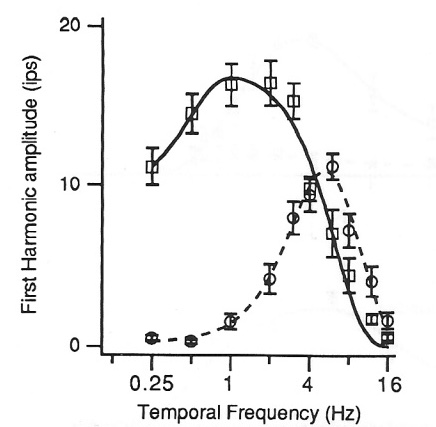

Absolute phase and latency are weakly correlated with the temporal frequency tuning of amplitude.

Lagged cells tend to be tuned to slightly lower frequencies than nonlagged cells, or at least than transient nonlagged cells.

The underlying reason might be that cells with longer latencies (Figure 31)

can not respond well at high frequencies simply because their latencies approach and exceed the stimulus periods at those frequencies.

A neuron with a latency of 100 ms can not respond well above 10 Hz (period of 100 ms).

Readers will probably think that much of brain function happens in moments, rather than extended periods of time.

Yet changes in the external world happen mostly over long time scales. Our internal thoughts and emotions are similarly slow.

Most experiments demand fairly isolated processing. In the real world, we must deal with numerous distractors.

We often need to search through activity that is filtered out as irrelevant before we get to the task at hand.

Because most things change slowly in time, we are less aware of all the things going on at low temporal frequencies,

to which we slowly adapt (see below; Cohen room).

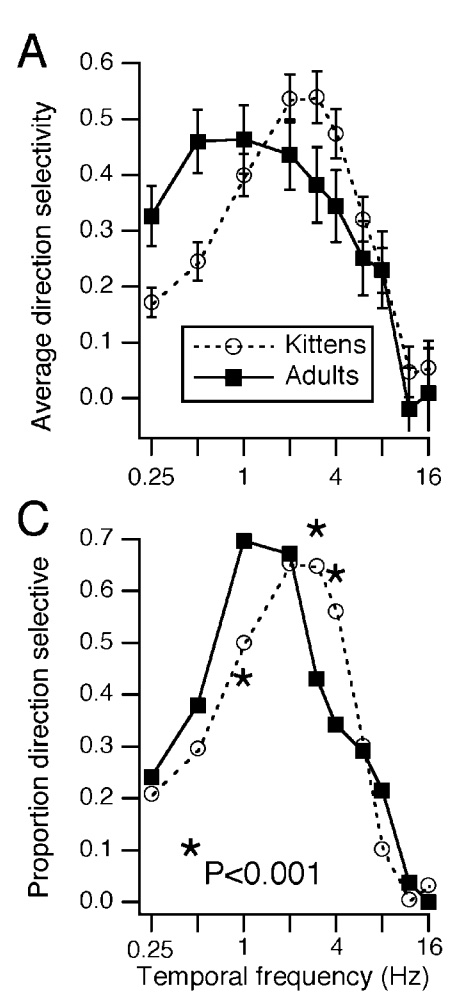

Many authors continue to disregard phase, and write that

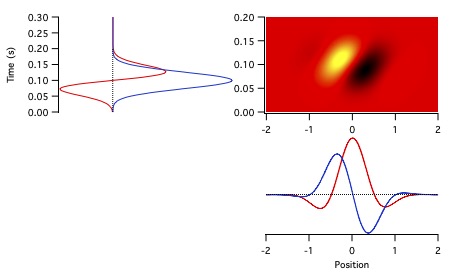

different latencies across a population of neurons could be the basis of direction selectivity.

We will discuss this issue at length, emphasizing that direction selectivity varies with temporal frequency due to the interaction of absolute phase and latency.

We concentrate on time here, but must mention that space should be conceived in an analogous fashion.

We don't say where we are in GPS terms.

We describe our location in relative terms. We live on the south side of town,

and at the current time are upstairs in the middle of the study at the front of the house.

The moon is low in the sky above the tree at the edge of the yard. The brain similarly uses phase to locate us in space.

The hippocampal place cells mentioned above are created from two-dimensional spatial maps in entorhinal cortex.

The entorhinal neurons were termed grid cells.

There are grid cells with different spatial frequencies and phases, mapped across entorhinal cortex.

Elsewhere in the brain, there may be many other spatial mapping strategies, but it's a solid bet that they rely on frequency and phase, just like time.

Our view of time differs from the conventional view. We will now make this more precise,

by describing some mathematical structures that will be relevant for understanding how the brain processes time.

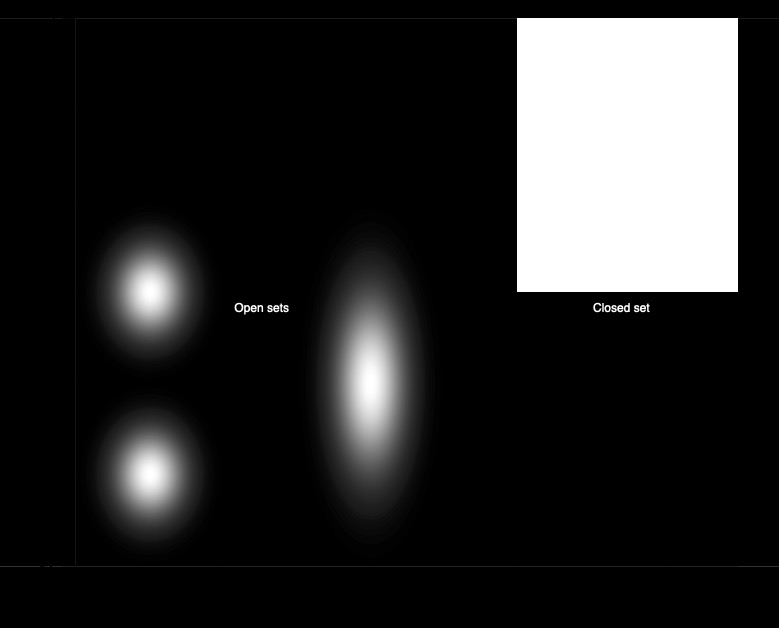

A topological space is a set of points and a set of subsets of those points (called the open sets) that satisfy a few conditions:

the empty set (with no points in it) and the entire set are open sets; any finite intersection of open sets is open;

and any union of open sets, even of an infinite number of open sets, is open.

That is, the set of open sets is closed under finite intersections and all unions (Figure 13).
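For a finite set of points, these conditions can be checked mechanically. The sketch below uses an invented function name and relies on the fact that, for a finite family, closure under pairwise unions and intersections implies closure under all of them; it says nothing about infinite spaces such as the line or the disk.

```python
from itertools import combinations

def is_topology(points, open_sets):
    """Check the open-set conditions for a finite family of subsets of `points`."""
    whole = frozenset(points)
    opens = {frozenset(s) for s in open_sets}
    # The empty set and the entire set must be open.
    if frozenset() not in opens or whole not in opens:
        return False
    # Every pairwise intersection and union must again be open.
    for a, b in combinations(opens, 2):
        if (a & b) not in opens or (a | b) not in opens:
            return False
    return True

nested = [set(), {1}, {1, 2}, {1, 2, 3}]
broken = [set(), {1}, {2}, {1, 2, 3}]  # the union {1, 2} is missing
```

Here `nested` satisfies the conditions, while `broken` fails because the union of two of its open sets is not itself listed as open.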

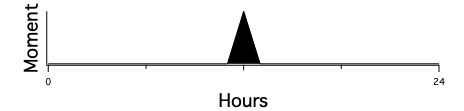

Our point of view is that moments, corresponding to points on the line, do not exist.

Instead, the basic objects of time have extension, and correspond to open sets in two-dimensional time.

Things happen over these open sets, and processes have non-zero durations.

Thus, thinking about the topology of time, we ask what points in time are near each other.

Many people would say that times that are separated by an arbitrarily short length of time are near each other,

thereby thinking in terms of the natural topology on the line.

The notion of continuity will arise below, but we won't go into its technicalities.

The key idea is that we think of time in terms of our activities. If we say that it's time to eat lunch (dinner for some people),

sets of times that might be roughly repeated every day are thought of as near each other.

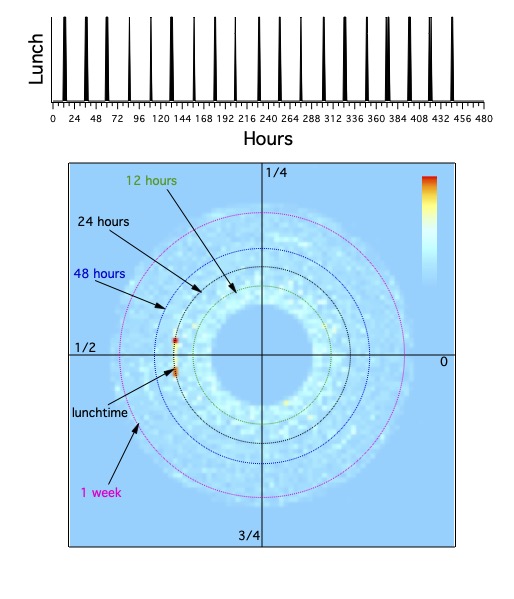

These points form an open set on the line, but it is natural to think of them on the disk,

where a period of a day is a circle that lies at a certain distance from the origin, and lunchtime takes up a small segment of the day.

Note that I've chosen examples of phase values that are easy to understand in terms of fairly natural periods,

especially of one day. This is by no means necessary; it is simply a choice to try to make the explanations simpler.

The disk of time (Figure 14) consists of the set of all periods and phases, with periods positive (greater than 0) real numbers,

and phases having values between 0 and 1,

with 0 and 1 being the same point (so phase takes values on a circle). The disk is the product of the open interval of real values greater than 0, times the circle.

The periods should be plotted logarithmically, so that the distance between a second and a minute (a factor of 60) is the same distance as between a minute and an hour.

One can also think of this as a cone, which may give a better picture of increasing periods with distance from the apex of the cone.
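One possible way to place a (period, phase) pair on such a plot is sketched below. Several details are assumptions made only for illustration: a logarithm never reaches the center, so a shortest displayed period (`min_period_s`) is mapped to radius 0, and phase is drawn as an angle starting from the positive x axis.

```python
import math

def disk_radius(period_s, min_period_s=0.001):
    """Logarithmic distance from the center; min_period_s sits at radius 0."""
    if period_s <= min_period_s:
        raise ValueError("period must exceed the shortest displayed period")
    return math.log10(period_s / min_period_s)

def disk_point(period_s, phase_c, min_period_s=0.001):
    """Cartesian coordinates of a (period, phase) pair on the disk of time."""
    radius = disk_radius(period_s, min_period_s)
    angle = 2 * math.pi * (phase_c % 1.0)  # phases 0 and 1 are the same point
    return radius * math.cos(angle), radius * math.sin(angle)

second_to_minute = disk_radius(60) - disk_radius(1)
minute_to_hour = disk_radius(3600) - disk_radius(60)
```

With this scaling, the step from a second to a minute covers the same radial distance as the step from a minute to an hour.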

When we walk, we move one leg forward, then bring the other forward, no matter how fast we walk.

This makes sense when thought of in terms of the phases at which different behaviors occur over some periods.

We don't move the right leg forward at a fixed number of seconds after the left leg: that depends on how fast we're walking.

But we generally move our legs in fixed phase relationships. When dancing with a partner, this is usually necessary!

We can map points on the line to the disk, and vice versa. It should be obvious, however,

that these representations of time are different from each other.

Some spaces that look different are nonetheless equivalent topological spaces: the disk and the cone, for instance, or the open interval between 0 and 1 and the entire line.

Intuitively, two spaces are equivalent if they can be continuously deformed into each other.

The line can not be continuously deformed into the disk.

The most obvious difference between the disk and the line is their dimensionality. As mentioned above, that time is two-dimensional might not seem apparent to people who are heavily tied to the one-dimensional view,

but all of our normal time-keeping is done with two-dimensional displays: calendars showing several weeks and days in each week, clocks with hands showing the phase for at least two periods,

and even digital displays showing the hour, followed typically by a colon to separate that phase from the phase given as the number of minutes in the hour.

Note that a sundial is really one-dimensional, with the topology of the circle: only a single period is displayed, so that the period dimension collapses to a point, a zero-dimensional space.

A single point on the line projects to a set of points on the disk (Movie 4) that form a spiral.

These points all have phases near 0 for long enough periods (periods much longer than the value of the time).

With decreasing period, phase increases. The relation between period, phase and time is t=𝜑⋅pd,

where t is the time, 𝜑 is the phase, and pd is the period.

This relation can also be written as 𝜑 = ωt, where ω is temporal frequency, the reciprocal of period, as pointed out above,

where we discussed that latency (the variable t here) is the slope of phase vs. frequency.
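The projection of one point on the line onto the disk can be sketched by evaluating the phase at a handful of periods. The function name and the sample values are illustrative; the phase is reported modulo one cycle, as it would appear on the disk.

```python
def spiral_of_moment(t_s, periods_s):
    """Phase (in cycles, modulo 1) of a single time t at each of several periods."""
    return [(pd, (t_s / pd) % 1.0) for pd in periods_s]

# A time of 90 s viewed at periods of an hour, ten minutes, two minutes, one minute.
points = spiral_of_moment(90.0, [3600.0, 600.0, 120.0, 60.0])
```

At the longest period the phase is close to 0; as the period shrinks the phase advances, and once the period is shorter than the time itself the phase wraps around the circle, tracing the spiral.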

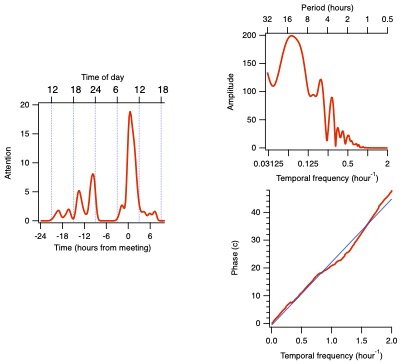

The power of the frequency domain view of time lies in putting together different processes to see how they might be related.

If you want to see how the attention paid to the meeting is related to some other variable, such as release of a stress hormone,

alertness, sympathetic activation, or dopamine release,

you could convolve the rather complicated time-domain function above with a similarly complicated time domain function for your other variable.

Such comparisons on the time line are difficult to quantify. In the frequency domain, on the other hand,

it is mostly a matter of dividing the amplitudes and subtracting the phases between the two functions.

The phase difference tells you how your variables are related: what direction and timing any causal relationship might have.

The sign of the latency gives the direction of causality.

An absolute phase difference near 0 means that your variables are synchronized,

but other absolute phase values give insights into the transformations going on between them.

A function in the frequency domain defines a contour above the disk, with the height being the amplitude,

typically lying along a spiral (𝜑 = Lω + 𝜑0).

Over the range of frequencies where two functions have strong amplitudes,

the differences in the slopes and in the phases at low frequencies determine the relationship between them.

Although the amplitudes contain information that can be important,

the key information lies on the disk, in these differences between the phase vs. temporal frequency information.

That is, the kernel or transfer function that relates the two functions is largely defined as a set of points on the disk

where amplitudes are significant.

It is therefore worthwhile to study the category of functions on the disk.

People are taught about the time line for some good reasons, despite its dangers.

The line can in fact be usefully combined with the disk.

This time-temporal frequency hybrid can take the form of a wavelet transform from the time domain to this time-temporal frequency domain.

The wavelet representation derived from a function of time shows the amplitude and phase of the original function

as a function of frequency over localized regions of one-dimensional time.

One example of how wavelets can provide additional information involves non-stationary processes.

Imagine that you want to understand the relationships between the function showing your attention on a meeting

and a function showing dopamine release over the same time period.

Say that dopamine release tends to be suppressed until after the meeting because of other factors than purely your attention to the meeting.

It is therefore worthwhile to partition your functions into before and after the meeting.

The wavelet versions are suited to these sorts of choices about portions of time where the system might differ because of other factors.

For instance, you may do the same sort of thing every day, like working. If you want to study some aspect of your work life,

you can focus on a daily period and look at the phase behavior over that period.

However, you realize that the results would be skewed if you included days when you didn't work.

So it is worth cutting out those days from your data set.

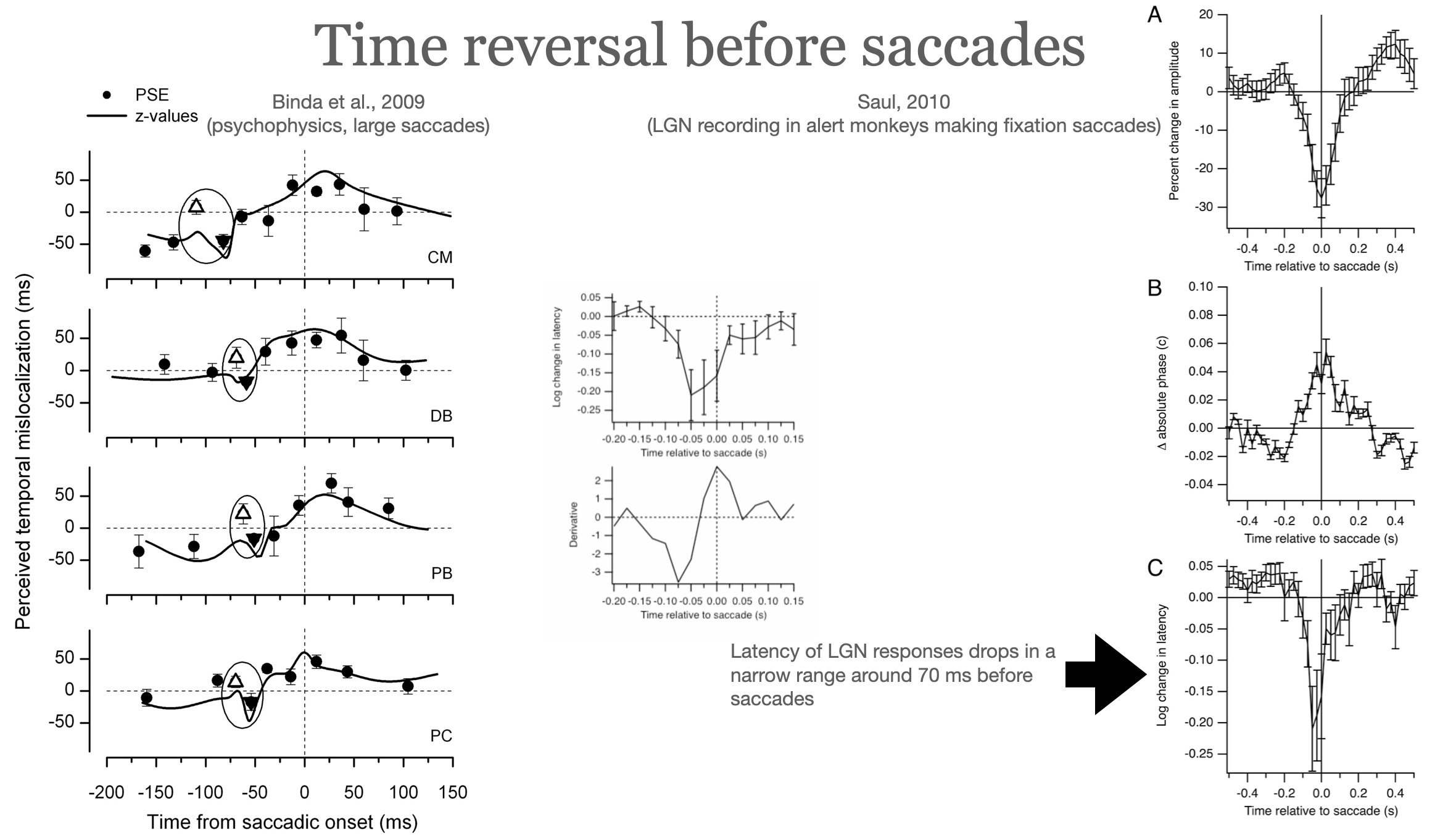

A real example of treating a non-stationary system that way comes from

a study of how visual neurons behave with respect to eye movements.

Monkeys were trained to fix their eyes on a small LED for 5 s at a time. While they were fixating,

visual stimuli were shown away from the LED to evoke activity from a neuron in the lateral geniculate nucleus (LGN) in the thalamus.

These neurons have relatively uncomplicated receptive fields (see below),

with a small region in visual space (spatial receptive field) where they respond with particular temporal profiles (temporal receptive field).

Even while fixating, monkeys (and humans) make small eye movements, typically 1-4 times each second.

We don't notice these fixational saccades (jumps in eye position) despite the fact that they make the world jump around on our retina,

like a film shot by an unsteady hand-held camera. One of the questions posed by this study involves how our brains discount these movements so that we don't notice them.

The hypothesis was that these LGN cells changed their behavior around the times when fixational saccades occur,

so that their timing compensates for the jump across the retina.

Through some complicated analyses, including breaking wavelets up into different temporal epochs relative to when saccades occurred,

we determined that the neurons changed their behavior around the times of the fixational eye movements.

There is a considerable literature about what happens around saccadic eye movements.

The main result has been that amplitudes of neuronal responses to visual stimuli are greatly reduced around the times of saccades.

An interpretation is that we are effectively blinded for a brief time, which may be why we don't notice the shift in the world.

Timing has rarely been considered. We showed that a consistent change in timing as well as amplitude occurs for these small fixational saccades.

Absolute phase increases during the period from about 100 ms before to 100 ms after the saccades

(Fig. 18B; this is the same time course during which amplitudes decline).

This makes responses more sustained. Latency shortens remarkably before the saccades, most strongly about 70 ms beforehand (Fig. 18C).

Another problem involves integrating stimulus locations before and after saccades. In the absence of a saccade,

if a stimulus moves abruptly from one spatial location to another, we notice the change.

If the same change in retinal location is created by a saccade, however, we do not see it as movement of the stimulus in the world.

In the first of these situations, only the stimulus moves, and the background of the world stays put on the retina,

whereas in the second case, both the stimulus and background move.

Note, however, that the changes in neuronal receptive field properties occur before the saccade, so they do not depend solely on the relative movement of figure and ground.

The solution could be that the timing changes in the LGN that make neurons more sustained permit cells that were responding to the stimulus prior to the saccade to continue their activity after the saccade,

when other cells that have receptive fields at the new location after the saccade are firing.

The overlapping activity in these cells with different spatial receptive fields on the retina could signal that these locations are equivalent in visual space.

Morrone and colleagues performed related psychophysical experiments on humans (Figure 18).

They flashed a pair of bars at different intervals of time between the flashes,

and in either order (bar A then bar B, or bar B then bar A). They also had the subjects make saccades at different times around the flashes.

In one of their experiments, the subject reported which bar came first.

They obtained a compelling result: when the flashes came fairly close together and about 70 ms before a saccade,

the subjects reliably reported the order wrongly. That is, people consistently said that bar B flashed before bar A when in fact the reverse was true.

The interpretation is that time reverses for us just before we make saccades.

In the Saul study, we noted a sudden,

short-duration decrease in latency for the LGN cells at 70 ms before the saccades (Fig. 18C).

We speculate that the Morrone results could be interpreted in terms of this change in latency:

if the first bar flashes 100 ms before the saccade, it would evoke responses at a longer latency than a second bar that flashes at 70 ms before the saccade,

where latencies are greatly shortened. The response to the second bar would then occur before the response to the first bar.
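
The arithmetic behind that speculation can be sketched as follows. The two latency values are hypothetical numbers chosen only to illustrate the reversal; they are not measurements from either study. The flash times are the ones used in the text above.

```python
def response_time_ms(flash_time_ms, latency_ms):
    """Time of the neural response, relative to saccade onset (ms)."""
    return flash_time_ms + latency_ms

# Hypothetical latencies, for illustration only.
BASELINE_LATENCY_MS = 60.0    # assumed latency well before the saccade
SHORTENED_LATENCY_MS = 20.0   # assumed latency about 70 ms before the saccade

first_flash_ms = -100.0       # first bar, 100 ms before the saccade
second_flash_ms = -70.0       # second bar, 70 ms before the saccade

first_response_ms = response_time_ms(first_flash_ms, BASELINE_LATENCY_MS)
second_response_ms = response_time_ms(second_flash_ms, SHORTENED_LATENCY_MS)

# The order reverses whenever the drop in latency exceeds the 30 ms
# interval between the two flashes.
order_reversed = second_response_ms < first_response_ms
print(first_response_ms, second_response_ms, order_reversed)
```

With these assumed values the response to the first bar arrives 40 ms before the saccade and the response to the second bar arrives 50 ms before it, so the second bar is registered first.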

Remember that the responses measured from the neurons in this study were not localized in time.

The analyses needed to be performed in the frequency domain, based on phase in particular.

Working in the time domain alone would not enable these results to be observed.

8 I was teaching calculus to engineering students at West Virginia University, and noticed that a book had been left behind by the previous teacher.

After class, I went to return the book to that professor, but first scanned its content. It was The Geometry of Biological Time, by Art Winfree, a beautiful book in every way.

I found the owner, Ken Showalter, in the Chemistry Department. Ken is a physical chemist, and we wound up talking about the book and many other things.

And wound up collaborating, studying time. I got to spend some wonderful time with the polymath Winfree in the June snow of Utah.

At the time, Ken was working on a chemical reaction that involved the reagents sodium iodate and arsenous acid.

If you put these chemicals in a beaker at the beginning of a lecture, they will sit as a transparent liquid.

At some point during the lecture, the fluid in the undisturbed beaker will suddenly turn black.

This is an example of a clock reaction.

Inorganic chemicals can undergo reactions at regular or irregular times.

Ken had been looking at how the reaction in a tube of this solution can be triggered at one point in space,

creating a wave that traveled down the tube. We worked on analyzing these propagating waves.

I was living on a piece of land we called "Out Yonder" an hour's drive from Morgantown at the time,

working in the woods, cooling off in the pond, then trying to figure out how the waves worked.

Ken and I sent notes (a few years later there would have been email) back and forth about our progress.

He was sorting out the chemistry, and I was playing with the math.

We each came up with the answers from our two perspectives at the same time.

I had seen that the waves could be described in a pretty (closed-form,

meaning easy to write down and read) function if the reaction rate was a cubic function of iodide concentration.

Ken had seen that the rate was in fact a cubic. We nailed down how well the predictions fit the data,

determining the speed at which the waves traveled,

and published a paper with Adel Hanna.

That got me started on thinking about time.

I learned many things from Ken, especially about the wonders of collaboration.

Some people don't recognize that Science is about people. But humans are social animals,

and we thrive on our interactions.

The pleasure of doing Science is largely spending time with people you enjoy and appreciate.

Every once in a while you learn something, providing enormous pleasure.↩

9 My friend Robert Tajima and I took Max Delbrück's course that covered a variety of biological and philosophical topics, including the Chinese Remainder Theorem.

I got to know Max on annual trips to Joshua Tree, with his wife Manny and their children (Tobi works on electronic chips that process visual stimuli,

including direction selective elements that analyze moving images, unfortunately using 1-dimensional time;

Ludina Delbrück Sallam is an Arabic translator; there were two older children I didn't know),

English professors David and Annette Smith's family, and several other undergraduates.

Max kept star charts like the ancients. My last year, he told me how his retinal detachments kept him from being able to make out the stars well enough to continue this work,

and asked if I'd take over his charts. I stupidly declined, fearing I wouldn't do them justice.

Max viewed Science not as building a cathedral, but instead as people piling up stones.

I found his attitudes refreshing and on point.

Max deserved every honor possible, just a giant of a man.↩

10 The right hand side is the Fourier transform of the left hand side here (and the left hand side is the inverse Fourier transform of the right hand side).

The factor that multiplies F(ω) simply produces a phase shift of Lω, so that the latency shift L on the left hand side results in a phase shift of Lω.

The relation between the phase shift and ω is therefore linear with a slope of L.↩
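
A quick numerical check of this relation, as a sketch under stated assumptions: the sampling rate, the 40 ms latency, and the random test function are all made up for the example. Phase is expressed as a lag in cycles and frequency in Hz, so the slope comes out directly in seconds. The sign of the phase depends on the Fourier convention in use; NumPy's convention turns a delay into a negative phase, which is reported here as a positive lag.

```python
import numpy as np

fs = 1000.0                        # assumed sampling rate (Hz)
n = 1000                           # 1 s of data, so DFT bins fall on whole Hz
latency_s = 0.040                  # hypothetical latency L = 40 ms
shift = int(round(latency_s * fs))

rng = np.random.default_rng(0)
f_t = rng.standard_normal(n)       # arbitrary function of time
delayed = np.roll(f_t, shift)      # f(t - L), using a circular shift

freqs = np.fft.rfftfreq(n, d=1.0 / fs)
F = np.fft.rfft(f_t)
G = np.fft.rfft(delayed)

# Phase lag of the delayed function relative to the original, in cycles.
lag_cycles = -np.unwrap(np.angle(G / F)) / (2.0 * np.pi)

# Slope of phase lag (cycles) against frequency (Hz) is the latency (s).
slope_s = np.polyfit(freqs, lag_cycles, 1)[0]
print(slope_s)
```

The amplitudes of `F` and `G` are identical; only the phase changes, and it changes linearly with frequency.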

11 Phase is usually not exactly linearly related to frequency, because of linear and nonlinear filtering.

We ignore those complications for simplicity. For most processes, a linear relation between phase and frequency dominates.

This is especially true if amplitude is taken into account,

so that weaker amplitudes are discounted when considering the phase vs. frequency relation.↩
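
One way to discount weaker amplitudes, sketched here under assumptions, is a weighted straight-line fit of phase against frequency with the amplitudes as weights. The amplitude profile, noise level, and 50 ms latency are invented for the example, and the phases are assumed to be already unwrapped and expressed as lags in cycles. This is an illustration of the idea, not the analysis used in the studies described in the text.

```python
import numpy as np

rng = np.random.default_rng(1)

true_latency_s = 0.050                       # hypothetical latency (s)
freqs_hz = np.arange(1.0, 33.0)              # 1 to 32 Hz

# Assumed low-pass amplitude profile: responses weaken at high frequencies.
amplitude = 1.0 / np.sqrt(1.0 + (freqs_hz / 8.0) ** 2)

# Assumed noise model: phase estimates get noisier as amplitude falls.
phase_noise = rng.standard_normal(freqs_hz.size) * 0.01 / amplitude
phase_lag_cycles = true_latency_s * freqs_hz + phase_noise

# Weighted least squares: points with small amplitude count for less.
slope, intercept = np.polyfit(freqs_hz, phase_lag_cycles, 1, w=amplitude)
estimated_latency_s = slope                  # slope in cycles per Hz = seconds
print(estimated_latency_s, intercept)
```

The slope estimates the latency, and the intercept is a frequency-independent phase offset.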

One of the biggest problems in neuroscience, as well as elsewhere, is the local-global transformation.

Something happens at one time, and something else happens at another time.

How do we know whether or not these things are related, and how do we put them together into a properly ordered sequence?

When we listen to music, we need to integrate hundreds of elements over time,

and we can manage to extract meaning from the total that is clearly not present in any of the parts (Hasson et al. 2008; Carvalho & Kriegeskorte 2008).

Uri Hasson has explored these issues extensively in several modalities. Note that those studies argue for hierarchical processing.

Almost all functional studies of neurons have considered their local properties, those confined to their limited receptive or movement fields.

For example, visual and somatosensory neurons have spatial receptive fields, the region of visual space or skin where stimulation affects the neuron,

but our perceptions consist of objects that we see or feel.

Motor neurons typically cause a small group of muscle fibers to contract,

but the brain develops networks that plan and execute complex behaviors that employ those simpler motor neurons.

To reiterate the idea presented above, sensory neurons can be thought of as having temporal receptive fields

in the sense of responding at certain phases in the variation of some response property over some range of temporal frequencies.

Different neurons with different temporal phases and different response properties provide inputs to other neurons

that integrate those inputs to create an emergent response property not present in the inputs.

Motor neurons have movement fields, defined as the movements that the body makes when they are activated.

Single motor neurons are typically local units, but are assembled into more global units in order to execute useful behaviors.

Over the disk of time, a behavior consists of many motor neuron activations that have different phases and combine together to achieve a smooth, accurate movement.

The convergence of these inputs can be thought of as a stitch between them, creating a result that is less local and more global.

One can think of patches of fabric floating above the disk of time, and the brain sews the patches together to produce a larger quilt.

One imagines that there are advantages in creating a smooth, continuous quilt rather than a messy, torn quilt.

Mechanisms for resolving discontinuities are therefore called for, and studying continuity is worthwhile.

We will venture into some abstract mathematics at this point. That will no doubt scare off many readers.

I would urge you to hang on a bit. Hopefully, you will get something out of this. Eugenia Cheng provides examples of

how abstract mathematics describes things that we can understand intuitively in her book Unequal (factors of 30).

The same object does not look the same from different angles, as in the example of the photo of the impossible triangle above.

In general, equality and inequality are more complicated than we might expect.

These somewhat vague ideas can be formalized and made more rigorous, via the mathematical field of sheaf theory.

A sheaf is a mathematical object defined as a set of structures called stalks, and a map from the stalks to an underlying topological space.

That map simply says where each stalk is located in the topological space. The stalks can be any sort of object that has values of any kind, with any structure.

A section consists of a set of values from the stalks over an open set in the space. A sheaf has the "gluing" property,

whereby if two sections are the same over the intersection of their underlying open sets,